TL;DR:

Scaling AI isn’t just about the model — it’s about the data foundation. Without a unified, modern platform, most AI projects stay stuck in pilot mode. At the recent Microsoft Fabric Conference, we saw firsthand how Fabric delivers that missing foundation: one copy of data, integrated analytics, built-in governance, and AI-ready architecture. The results are faster scaling, higher accuracy, and greater ROI from AI investments.

The illusion of quick wins with AI

Last week, our team attended the Microsoft Fabric Conference in Vienna, where one theme came through loud and clear: AI without a modern data platform doesn’t scale.

It’s a reality we’ve seen with many organisations. AI pilots often succeed in a controlled environment — a chatbot here, a forecasting model there — but when teams try to scale across the enterprise, projects stall.

The reason is always the same: data. Fragmented, inconsistent, and inaccessible data prevents AI from becoming a true enterprise capability. What looks like a quick win in one corner of the business doesn’t translate when the underlying data foundation can’t keep up.

The core problem: data that doesn’t scale

For AI initiatives to deliver value at scale, organisations typically need three things in their data:

- Volume and variety — broad, representative datasets that capture the reality of the business.

- Quality and governance — data that is accurate, consistent, and compliant with policies and regulations.

- Accessibility and performance — the ability to access and process information quickly and reliably for training and inference.

Yet in many enterprises, data still lives in silos across ERP, CRM, IoT, and third-party applications. Legacy infrastructure often can’t provide the processing power that AI requires, while duplicated and inconsistent data creates trust issues that undermine confidence in AI outputs.

On top of that, slow data pipelines delay projects and drive up costs. These challenges explain why so many AI initiatives never move beyond the pilot phase.

The solution: a modern, unified data platform

A modern data platform doesn’t just centralise storage — it makes data usable at scale. That means unifying enterprise and external data within a single foundation and enforcing governance so that information is clean, secure, compliant, and reliable by default.

It must also deliver the performance required to process large volumes of data in real time for demanding AI workloads, while providing the flexibility to work with both structured sources like ERP records and unstructured content such as text, images, or video.

This is exactly the gap Microsoft Fabric is built to close.

Enter Microsoft Fabric: AI’s missing foundation

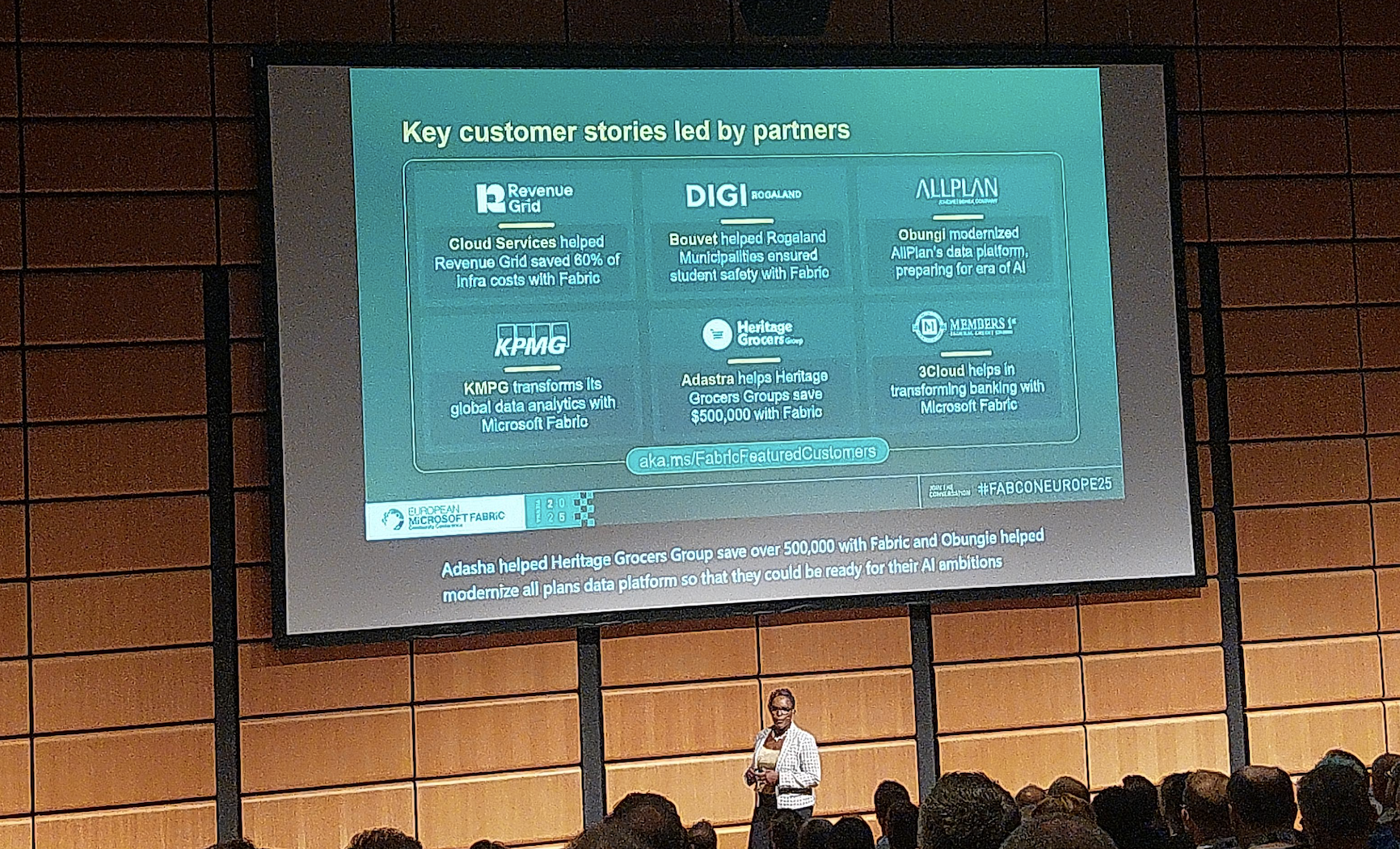

At the conference, we heard repeatedly how Fabric is turning AI projects from disconnected experiments into enterprise-scale systems.

Fabric isn’t a single tool. It’s a complete data platform designed for the AI era — consolidating capabilities that used to require multiple systems:

- OneLake, one copy of data — no duplication, no confusion; store once, use everywhere.

- Integrated analytics — data engineering, science, real-time analytics, and BI in one platform.

- Built-in governance — security, compliance, and lineage embedded by design.

- AI-ready architecture — integrates seamlessly with Azure Machine Learning, Copilot, and Power Platform.

- Dataverse + Fabric — every Dataverse project is now effectively a Fabric project, making operational data part of the analytics foundation.

- Improved developer experience — new features reduce friction and make it easier to turn raw data into usable insights.

- Agentic demos — showed that structured data preparation matters more than flashy models.

- Fabric Graph visualization — reveals relationships across the data landscape and unlocks hidden patterns.

The business impact

The message is clear: Fabric isn’t just a data tool — it’s the foundation that finally makes AI scale.

Early adopters of Fabric are already seeing results:

- 70% faster data prep for AI and analytics teams.

- Global copilots launched in months, not years.

- Lower infrastructure costs thanks to one copy of data instead of endless duplication.

Make your AI scalable, reliable, and impactful with Microsoft Fabric

AI without a modern data platform is fragile. With Microsoft Fabric, enterprises move from isolated pilots to enterprise-wide transformation.

Fabric doesn’t just modernise data. It makes AI scalable, reliable, and impactful.

Don’t let fragile data foundations hold back your AI strategy. Talk to our experts to explore how Fabric can unlock AI at scale for your organisation.

TL;DR:

Public AI tools like ChatGPT create security and compliance risks because you can’t control where sensitive data goes. Copilot Studio solves this by running inside your Microsoft 365 tenant, inheriting existing permissions, enforcing tenant-level data boundaries, and aligning with Microsoft’s Responsible AI standards and residency protections. With proper governance — from data cleanup and Data Loss Prevention to connector control and clear usage policies — you can enable safe, compliant AI adoption that builds trust and empowers employees without risking data leaks or reputational damage.

“How do we give employees access to AI tools without sensitive data leaking to public models?”

It’s the first question IT operations and compliance leaders need to consider when AI adoption comes up — and for good reason. While tools like ChatGPT are powerful, they aren’t built with enterprise governance in mind. As a result, AI usage remains uncontrolled, potentially exposing sensitive information.

The conversation is no longer about if employees will use AI, but how to allow it without risking data loss, non-compliance, or reputational damage.

In this post, we explore how you can deploy Copilot Studio securely to give teams the AI capabilities they want while keeping data firmly within organisational boundaries.

The governance challenge

Most free, public AI tools have one major drawback: you can’t control what happens to the data you give them. Paste in a contract or an HR document, and it could be ingested into a public model with no way to retract it.

For IT leaders, that’s an impossible position:

- Block access entirely and watch shadow AI usage grow.

- Allow access and risk sensitive data leaving your control.

What you need is a way to enable AI while ensuring all information stays securely within the organisation’s boundaries.

How Copilot Studio handles security and data

Copilot Studio is designed to work with — not around — your existing Microsoft 365 security model. That means:

- Inherited permissions: A Copilot agent can only retrieve SharePoint or OneDrive files the user already has access to. If permissions are denied, the agent can’t access the file. No separate AI-specific access setup is required.

- Tenant-level data boundaries: All processing happens within Microsoft’s secure infrastructure, backed by Azure OpenAI. There’s no public ChatGPT endpoint — data stays within your private tenant.

- Responsible AI principles: Microsoft applies its Responsible AI Standard, ensuring AI is deployed safely, fairly, and transparently.

For European customers, Copilot Studio also aligns with the EU Data Boundary commitment, keeping data processing inside the EU wherever possible. Similar residency protections apply globally under Microsoft’s Advanced Data Residency and Multi-Geo capabilities.
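To make the inherited-permissions point concrete, the toy model below shows the idea usually called security trimming: a search result is dropped unless the user, or one of their groups, already has access to the file. It is not how Copilot Studio or Microsoft Graph is implemented; `Document`, `principals_for` and `retrieve_for_user` are hypothetical names, and the keyword match stands in for real retrieval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A hypothetical indexed file plus the principals allowed to open it."""
    path: str
    text: str
    allowed: frozenset[str]


def principals_for(user: str, groups: dict[str, set[str]]) -> set[str]:
    """The user's own identity plus every group they belong to."""
    return {user} | {name for name, members in groups.items() if user in members}


def retrieve_for_user(query: str, user: str, index: list[Document],
                      groups: dict[str, set[str]]) -> list[str]:
    """Naive keyword search that only returns files the user can already open."""
    principals = principals_for(user, groups)
    hits = []
    for doc in index:
        if query.lower() not in doc.text.lower():
            continue  # not relevant to the question
        if doc.allowed.isdisjoint(principals):
            continue  # security trimming: no existing access, so never surfaced
        hits.append(doc.path)
    return hits


# Illustrative data: an HR-only salary file and an all-staff policy document.
groups = {"hr-team": {"alice"}, "all-staff": {"alice", "bob"}}
index = [
    Document("/hr/salary-bands.xlsx", "Salary bands 2025", frozenset({"hr-team"})),
    Document("/policies/leave.docx", "Leave and salary policy", frozenset({"all-staff"})),
]

print(retrieve_for_user("salary", "bob", index, groups))    # ['/policies/leave.docx']
print(retrieve_for_user("salary", "alice", index, groups))  # ['/hr/salary-bands.xlsx', '/policies/leave.docx']
```

Because the check reuses the access each user already has, any file that is overshared today is also reachable through the agent, which is why the data clean-up step matters.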

Governance in practice

Deploying Copilot Studio securely takes more than a few clicks. Successful rollouts include:

- Data readiness

Many organisations have poor data hygiene — redundant, outdated, or wrongly shared files. Before enabling Copilot, clean up data stores, remove unnecessary content, and confirm access rights. If Copilot can access it, so can employees with matching permissions.
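A first pass at this clean-up can be scripted against a file inventory exported from your document stores. The sketch assumes a hypothetical inventory format (`FileRecord`), an illustrative list of overly broad sharing targets and an arbitrary two-year staleness threshold; it flags stale files, files shared too widely and files with identical content hashes so that owners can review them before Copilot is enabled.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

# Illustrative "too broad" sharing targets; adjust to how your tenant names them.
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users", "Anyone with the link"}


@dataclass
class FileRecord:
    """One row of a hypothetical file inventory export."""
    path: str
    last_modified: date
    shared_with: set[str]
    content_hash: str  # e.g. a SHA-256 of the file contents


def audit(files: list[FileRecord], today: date, stale_days: int = 730) -> dict[str, list[str]]:
    """Group file paths by the hygiene issue they raise."""
    issues: dict[str, list[str]] = {"stale": [], "overshared": [], "duplicate": []}
    by_hash: dict[str, list[str]] = defaultdict(list)
    for f in files:
        if (today - f.last_modified).days > stale_days:
            issues["stale"].append(f.path)
        if f.shared_with & BROAD_PRINCIPALS:
            issues["overshared"].append(f.path)
        by_hash[f.content_hash].append(f.path)
    for paths in by_hash.values():
        issues["duplicate"].extend(paths[1:])  # keep the first copy, flag the rest
    return issues


files = [
    FileRecord("/hr/old-policy.docx", date(2021, 3, 1), {"hr-team"}, "a1"),
    FileRecord("/finance/salaries.xlsx", date(2025, 6, 1), {"Everyone"}, "b2"),
    FileRecord("/finance/salaries (1).xlsx", date(2025, 6, 2), {"finance"}, "b2"),
]
print(audit(files, today=date(2025, 9, 1)))
```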

- Data loss prevention

Use Microsoft’s built-in Data Loss Prevention (DLP) capabilities to stop Copilot from accessing or exposing sensitive information. At the Power Platform level (which covers Copilot Studio), DLP policies focus on controlling connectors; for example, blocking connectors that could pull data from unapproved systems or send it outside your governance boundary.

Beyond Copilot Studio, Microsoft Purview DLP offers a broader safety net. It protects sensitive data across Microsoft 365 apps (Word, Excel, PowerPoint), SharePoint, Teams, OneDrive, Windows endpoints, and even some non-Microsoft cloud services.

By combining connector-level controls with Purview’s sensitivity labels and classification policies, you can flag high-risk content such as medical records or salary data and configure DLP policies that prevent Copilot from retrieving or surfacing it.

- Connector control

Remove unnecessary connectors to prevent Copilot from accessing data outside your governance framework.
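Power Platform DLP policies place each connector in one of three groups: Business, Non-business or Blocked. Blocked connectors can't be used at all, and a single app, flow or agent can't combine Business and Non-business connectors. The sketch below applies those two rules to an agent's connector list using a made-up policy. It is an illustration rather than the Power Platform admin API, and treating unclassified connectors as Non-business is an assumption to check against your tenant's default group.

```python
from enum import Enum


class Group(Enum):
    BUSINESS = "Business"
    NON_BUSINESS = "Non-business"
    BLOCKED = "Blocked"


def check_agent(connectors: list[str], policy: dict[str, Group],
                default: Group = Group.NON_BUSINESS) -> list[str]:
    """Return policy violations for one agent's connector list (illustrative only)."""
    # Connectors missing from the policy fall into the default group (assumed Non-business).
    assigned = {c: policy.get(c, default) for c in connectors}
    violations = [f"{c} is blocked" for c, g in assigned.items() if g is Group.BLOCKED]
    in_use = {g for g in assigned.values() if g is not Group.BLOCKED}
    if {Group.BUSINESS, Group.NON_BUSINESS} <= in_use:
        violations.append("mixes Business and Non-business connectors")
    return violations


# A made-up policy for illustration.
policy = {
    "SharePoint": Group.BUSINESS,
    "Office 365 Outlook": Group.BUSINESS,
    "RSS": Group.NON_BUSINESS,
    "Dropbox": Group.BLOCKED,
}
print(check_agent(["SharePoint", "Office 365 Outlook"], policy))  # []
print(check_agent(["SharePoint", "RSS", "Dropbox"], policy))
# ['Dropbox is blocked', 'mixes Business and Non-business connectors']
```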

- Clear internal guidance

Publish company-specific usage rules. Load the documentation into Copilot Studio so employees can query an internal knowledge base before asking questions that rely on external or unverified sources.

- Escalation paths

For complex or sensitive questions, integrate Copilot Studio with ticketing systems or expert routing — for example, automatically opening an omnichannel support case.

Building trust in AI adoption

Security isn’t the only barrier to AI adoption — trust plays a critical role too. Employees, legal teams, and executives need confidence that AI tools won’t create new liabilities. Microsoft has taken several steps to address these concerns:

- Copyright protection: Under its Copilot Copyright Commitment, Microsoft stands behind customers if AI-generated output triggers third-party copyright claims, covering legal defence and costs.

- Compliance leadership: Microsoft has been proactive in aligning AI services with global and regional legislation, from the EU Data Boundary to sector-specific regulations.

- Responsible use by design: The company’s Responsible AI principles ensure AI is developed and deployed with fairness, accountability, transparency, and privacy as core requirements.

For IT leaders, this means adopting Copilot Studio isn’t just a technical exercise but an opportunity to establish governance, legal assurance, and ethical use standards that will support AI adoption for years to come.

Why AI governance for Copilot Studio can’t wait

Microsoft has been proactive on AI legislation and compliance since the start, with explicit commitments on data protection and even AI copyright indemnification. But no matter how robust the vendor’s safeguards, governance still depends on your internal policies and configuration.

The earlier you establish these guardrails, the sooner you can empower teams to innovate without risk — and avoid retrofitting controls after a security incident.

Need help? Book your free readiness audit to see exactly where your governance gaps are and how to fix them before rollout so you can deploy Copilot Studio with confidence.


TL;DR:

Legacy ERP workflows rarely map cleanly into Dynamics 365. Familiar screens, custom approvals, and patched permissions often break because Dynamics 365 enforces a modern, role-based model with standardised navigation and workflow logic. This isn’t a bug but the natural result of moving from heavily customised systems to a scalable platform. To avoid adoption failures, don’t replicate the old system screen by screen. Focus on what users actually need, rebuild tasks with native Dynamics 365 tools, redesign security around duties and roles, and test real scenarios continuously. Migration is your chance to modernise, and if you align workflows with Dynamics 365 patterns, you’ll gain a system that’s more secure, more scalable, and better suited to how your business works today.

Why your ERP workflows fail after migration

You’ve migrated the data. You’ve configured the system. But now your field teams are stuck.

They can’t find the screens they used to use. The approvals they rely on don’t trigger. And the reports they count on? Missing key data.

This isn’t a technical glitch. It’s a user experience mismatch.

When companies move from legacy ERP systems to Dynamics 365, the shift isn’t just in the database. The entire way users interact with the system changes, from navigation paths and field layouts to permission models and process logic.

If you don’t redesign around those changes, your ERP transformation will quietly break the very workflows it was meant to improve.

This is the fourth part of our series on ERP migration. Read more about:

- Our playbook for a successful ERP migration

- Reasons your ERP migration takes longer than expected, and

- How to speed up ERP migration without compromising on quality

Dynamics 365 isn’t your old ERP — and that’s the point

Legacy systems were often customised beyond recognition. Buttons moved, fields were renamed, permissions manually patched. As a result, they were highly tailored systems that made sense to a small group of users but were nearly impossible to scale or maintain.

Dynamics 365 takes a different approach. It offers a modern, role-based experience that works consistently across modules. But that means some of your old shortcuts, forms, and approvals won’t work the same way — or at all.

This can catch users off guard, especially if no one has taken the time to explain why the system changed or to align the new setup with how teams actually work.

Where the breakage usually happens

Navigation

Field engineers can’t find the work order screens they’re used to. Finance can’t locate posting profiles. Procurement doesn’t know how to filter vendor invoices. Familiar menus are gone, replaced with new logic that’s more powerful but also less intuitive if you’re coming from a heavily customised legacy system.

Permissions

Old ERPs often relied on manual access grants or flat permission sets. In Dynamics 365, role-based security is more structured, and less forgiving. If roles aren’t mapped correctly, users lose access to critical features or gain access they shouldn’t have.

Workflow logic

Your old approval chains or posting setups may not map cleanly to Dynamics 365. For example, workflow conditions that relied on specific field values may behave differently now, or require reconfiguration using the new workflow engine.

Day-to-day tasks

Sometimes it’s as simple as a missing field or a renamed dropdown. But that can be enough to stall operations if users aren’t involved in the migration process and given time to learn the new flow.

How to avoid workflow disruption in your Dynamics 365 migration

Don’t try to copy the old system screen for screen. That’s a common mistake and it leads to frustration. Instead, map legacy processes to Dynamics 365 patterns. Focus on what the user is trying to achieve, not what the old screen looked like.

Start with your core user tasks.

- What does a finance user need to do each day?

- What about warehouse staff?

- Field service engineers?

Identify their critical workflows, then rebuild them in Dynamics 365 using native forms, views, and automation.

Review and rebuild your security model. Role-based security is at the heart of Dynamics 365. You’ll need to define duties, privileges, and roles properly, not just copy old access tables. Test for least privilege and ensure segregation of duties.
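In Dynamics 365 finance and operations apps, security roles are built from duties, duties group privileges, and segregation-of-duties rules are defined between duties. The sketch below models that hierarchy with plain dictionaries to show the two checks mentioned above: conflicting duties held by one user, and privileges granted beyond what the user's tasks need. Every role, duty and privilege name is hypothetical, not a standard Dynamics 365 security object.

```python
# Hypothetical security model: role -> duties, duty -> privileges.
ROLES: dict[str, set[str]] = {
    "AP clerk": {"Maintain vendor invoices"},
    "AP manager": {"Approve vendor payments"},
    "Vendor master admin": {"Maintain vendor master"},
}
DUTIES: dict[str, set[str]] = {
    "Maintain vendor invoices": {"VendInvoiceCreate", "VendInvoiceEdit"},
    "Approve vendor payments": {"VendPaymentApprove"},
    "Maintain vendor master": {"VendAccountCreate", "VendBankAccountEdit"},
}
# Duty pairs that no single user should hold (segregation of duties).
SOD_RULES = [
    ("Maintain vendor invoices", "Approve vendor payments"),
    ("Maintain vendor master", "Approve vendor payments"),
]


def duties_of(roles: list[str]) -> set[str]:
    return set().union(*(ROLES[r] for r in roles))


def privileges_of(roles: list[str]) -> set[str]:
    return set().union(*(DUTIES[d] for d in duties_of(roles)))


def sod_conflicts(roles: list[str]) -> list[tuple[str, str]]:
    """Duty pairs from SOD_RULES that this combination of roles violates."""
    held = duties_of(roles)
    return [(a, b) for a, b in SOD_RULES if a in held and b in held]


def excess_privileges(roles: list[str], needed: set[str]) -> set[str]:
    """Privileges granted but not needed for the user's tasks (least-privilege check)."""
    return privileges_of(roles) - needed


print(sod_conflicts(["AP clerk", "AP manager"]))
# [('Maintain vendor invoices', 'Approve vendor payments')]
print(excess_privileges(["AP clerk"], needed={"VendInvoiceCreate"}))
# {'VendInvoiceEdit'}
```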

Test user scenarios in every sprint. Don’t wait for UAT to catch usability issues. Include key personas in each migration cycle. Run scenario-based smoke tests and regression checks. Use test environments that mirror production, and validate real-life tasks end to end.

Provide context and support. Users aren’t just learning a new tool — they’re changing habits built over years. Train them with use-case-driven sessions, not generic walkthroughs. Show them how the new process works and why it changed.

Migration is your chance to modernise. Don’t waste it

Your ERP isn’t just a backend system. It’s where users spend hours every day getting work done. If it doesn’t work the way they expect, adoption will suffer, and so will your transformation goals.

✅ Legacy workflows may not map to D365 one-to-one

✅ Navigation, permissions, and logic will differ

✅ Redesign processes around D365 patterns, not legacy layouts

✅ Review role-based permissions carefully

✅ Involve users early and test real scenarios every sprint

Done right, this isn’t just damage control. It’s an opportunity to rebuild your processes in a way that’s more secure, more scalable, and actually aligned with how your business works today.

Just because your old system let you do something doesn’t mean it’s how your new system should work — and now’s the perfect time to make that shift. Consult our experts before you begin your migration and set the project up for success.

TL;DR

ERP projects don’t collapse because of the software — they collapse when messy, inconsistent, or incomplete data is pushed into the new system. Bad data leads to broken processes, frustrated users, unreliable reports, and compliance risks. The only way to avoid this is to treat data quality as a business priority, not an IT afterthought. That means profiling and cleansing records early, aligning them to Dynamics 365’s logic instead of legacy quirks, validating and reconciling every migration cycle, and involving business owners throughout. Clean data isn’t a nice-to-have — it’s the foundation of a successful ERP rollout.

Your ERP is only as good as your data

You can have the best ERP system in the world. But if your data is incomplete, inconsistent, or poorly structured, the result is always the same: broken processes, frustrated users, and decisions based on guesswork.

We’ve seen Dynamics 365 projects delayed or derailed not because of the software — but because teams underestimated how messy their data really was.

So let’s talk about what happens when you migrate bad data, and what you should do instead.

This is the third part of our series on ERP migration. Read more about:

- Our playbook for a successful ERP migration

- How to speed up ERP migration without compromising on quality, and

- How to migrate to D365 from legacy technology

What bad data looks like in an ERP system

A new ERP system is supposed to streamline your operations, not create more chaos. But if your master data isn’t accurate, it starts causing problems right away.

Broken workflows

- Sales orders that fail because customer IDs don’t match

- Invoices that bounce because VAT settings are missing

- Stock that disappears because unit of measure conversions weren’t mapped

User frustration

- Employees waste hours trying to fix errors manually

- They lose confidence in the system

- Adoption suffers, and shadow systems start creeping in

Poor reporting

- Your shiny new dashboards don’t reflect reality.

- Finance teams can’t close the books.

- Operations can’t trust inventory figures.

- Leadership can’t make informed decisions.

Compliance risks

- Missing fields

- Outdated codes

- Unauthorised access to sensitive records

- Inconsistent naming conventions that make data hard to track

All of these can lead to audit issues or worse.

And yet, despite the stakes, data quality is often treated as a “technical detail” — something IT will sort out later. That’s exactly how costly mistakes happen.

Why data quality needs to be a business priority — not an IT afterthought

Data quality isn’t just about spreadsheets. It’s about trust, efficiency, and the ability to run your business.

Good ERP data supports business processes. It aligns with how teams actually work. And it evolves with your organisation — if you put the right structures in place.

Too many ERP projects approach migration as a technical handover: extract, transform, load. But that mindset ignores a crucial reality — only business users know what the data should say.

That’s why successful migrations start with cross-functional ownership, not just technical execution.

What good data management looks like before ERP migration

Identify and fix bad data early

Run profiling tools or even basic Excel checks to spot issues:

- duplicates,

- incomplete fields,

- outdated reference codes.

Don’t wait for them to break test environments.
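Basic profiling doesn't need specialist tooling. The sketch below runs the three checks listed above over customer records held as Python dictionaries: likely duplicates (names that match once case and spacing are ignored), incomplete required fields, and reference codes missing from the current code list. The field names and the `CURRENT_VAT_GROUPS` list are hypothetical; in practice the rows would come from a legacy extract.

```python
from collections import defaultdict

REQUIRED_FIELDS = ("customer_id", "name", "vat_group")
CURRENT_VAT_GROUPS = {"DOM", "EU", "EXPORT"}  # hypothetical current code list


def normalise_name(name: str) -> str:
    """Case- and whitespace-insensitive key for spotting duplicate customers."""
    return " ".join(name.lower().split())


def profile(rows: list[dict[str, str]]) -> dict[str, list]:
    by_name: dict[str, list[str]] = defaultdict(list)
    incomplete, outdated = [], []
    for row in rows:
        cid = row.get("customer_id", "?")
        by_name[normalise_name(row.get("name", ""))].append(cid)
        if any(not row.get(f, "").strip() for f in REQUIRED_FIELDS):
            incomplete.append(cid)
        code = row.get("vat_group", "").strip()
        if code and code not in CURRENT_VAT_GROUPS:
            outdated.append(cid)
    duplicates = [ids for key, ids in by_name.items() if key and len(ids) > 1]
    return {"duplicates": duplicates, "incomplete": incomplete, "outdated_codes": outdated}


rows = [
    {"customer_id": "C001", "name": "Contoso Ltd", "vat_group": "DOM"},
    {"customer_id": "C002", "name": "contoso  ltd", "vat_group": "DOM"},
    {"customer_id": "C003", "name": "Fabrikam", "vat_group": ""},
    {"customer_id": "C004", "name": "Northwind", "vat_group": "VAT17"},
]
print(profile(rows))
```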

Fit your data to the new system — not the old one

Legacy ERPs allowed all sorts of workarounds. Dynamics 365 has stricter logic.

You’ll need to normalise values, align with global address book structures, and reformat transactional data to fit new entities.
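Much of this normalisation comes down to mapping tables agreed with process owners. The sketch below maps hypothetical legacy unit-of-measure and country values to target codes and reports anything it can't map instead of guessing, so an owner can decide. The mapping tables and target codes are illustrative assumptions, not Dynamics 365 defaults.

```python
# Hypothetical mappings agreed with business owners (not Dynamics 365 defaults).
UOM_MAP = {"PCS": "ea", "PC": "ea", "EA": "ea", "KGS": "kg", "KG": "kg"}
COUNTRY_MAP = {"UK": "GBR", "GB": "GBR", "GERMANY": "DEU", "DE": "DEU"}


def normalise(record: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Return a normalised copy of the record plus the values that could not be mapped."""
    out = dict(record)
    unmapped = []
    for name, mapping in (("uom", UOM_MAP), ("country", COUNTRY_MAP)):
        raw = record.get(name, "").strip().upper()
        if raw in mapping:
            out[name] = mapping[raw]
        else:
            unmapped.append(f"{name}={record.get(name)!r}")  # leave for a human to decide
    return out, unmapped


print(normalise({"item": "A100", "uom": "pcs ", "country": "Germany"}))
# ({'item': 'A100', 'uom': 'ea', 'country': 'DEU'}, [])
print(normalise({"item": "B200", "uom": "BOX", "country": "UK"}))
# ({'item': 'B200', 'uom': 'BOX', 'country': 'GBR'}, ["uom='BOX'"])
```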

Validate everything

Don’t assume data has moved correctly just because the ETL pipeline ran.

Build checks into each stage:

- record counts

- value audits

- referential integrity checks.

Set up test environments that reflect real-world usage.
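The three checks above can be automated for every migration cycle. This sketch compares a hypothetical source extract with the loaded target rows: record counts, an amount total as a simple value audit (using `Decimal` to avoid floating-point rounding), and a referential integrity check that every order points to a known customer. Field names such as `customer_id` and `amount` are assumptions.

```python
from decimal import Decimal


def validate_load(source: list[dict[str, str]], target: list[dict[str, str]],
                  known_customers: set[str]) -> list[str]:
    """Return a list of problems; an empty list means all three checks passed."""
    problems = []
    # 1. Record counts
    if len(source) != len(target):
        problems.append(f"record count: source {len(source)}, target {len(target)}")
    # 2. Value audit on a key amount field
    src_total = sum((Decimal(r["amount"]) for r in source), Decimal(0))
    tgt_total = sum((Decimal(r["amount"]) for r in target), Decimal(0))
    if src_total != tgt_total:
        problems.append(f"value audit: source total {src_total}, target total {tgt_total}")
    # 3. Referential integrity: every target row must reference a known customer
    orphans = sorted({r["customer_id"] for r in target} - known_customers)
    if orphans:
        problems.append(f"referential integrity: unknown customers {orphans}")
    return problems


source = [
    {"order": "SO1", "customer_id": "C001", "amount": "100.00"},
    {"order": "SO2", "customer_id": "C002", "amount": "50.50"},
]
target = [
    {"order": "SO1", "customer_id": "C001", "amount": "100.00"},
    {"order": "SO2", "customer_id": "C099", "amount": "50.50"},
]
print(validate_load(source, target, known_customers={"C001", "C002"}))
# ["referential integrity: unknown customers ['C099']"]
```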

Involve business users early

IT can move data. But only process owners know if it's right.

Loop in finance, sales, procurement, inventory — whoever owns the source and target data. Get their input before migration begins.

Plan for reconciliation

Post-load, run audits to confirm data landed correctly. Compare source and target figures.

Validate key reports. Fix gaps before go-live, not after.
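Reconciliation usually compares business totals rather than individual rows. The sketch below sums amounts per account on both sides and reports accounts whose balances differ by more than a tolerance. The account numbers, the `account`/`amount` field names and the one-cent tolerance are hypothetical choices for illustration.

```python
from collections import defaultdict
from decimal import Decimal


def balances(rows: list[dict[str, str]]) -> dict[str, Decimal]:
    """Sum amounts per account."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for r in rows:
        totals[r["account"]] += Decimal(r["amount"])
    return totals


def reconcile(source_rows: list[dict[str, str]], target_rows: list[dict[str, str]],
              tolerance: Decimal = Decimal("0.01")) -> dict[str, Decimal]:
    """Accounts whose target balance differs from the source by more than `tolerance`."""
    src, tgt = balances(source_rows), balances(target_rows)
    gaps = {}
    for account in sorted(set(src) | set(tgt)):
        diff = tgt.get(account, Decimal(0)) - src.get(account, Decimal(0))
        if abs(diff) > tolerance:
            gaps[account] = diff
    return gaps


source = [
    {"account": "1100", "amount": "500.00"},
    {"account": "1100", "amount": "-200.00"},
    {"account": "2000", "amount": "75.00"},
]
target = [
    {"account": "1100", "amount": "300.00"},
    {"account": "2000", "amount": "70.00"},
]
print(reconcile(source, target))  # {'2000': Decimal('-5.00')}
```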

Data quality isn’t a nice-to-have. It’s a critical success factor

ERP migration is the perfect time to raise the bar. But to do that, you have to treat data quality as a core deliverable — not a side task.

That means budgeting time and effort for:

- Cleansing legacy records

- Enriching and standardising key fields

- Testing and validating multiple migration cycles

- Assigning ownership to named individuals

- Reviewing outcomes collaboratively with business teams

When done right, good data management saves time, cost, and credibility — not just during the migration, but long after go-live.

Clean data makes or breaks your ERP project

If you migrate messy, unvalidated data into Dynamics 365, the problems don’t go away — they multiply. This looks like:

- Broken processes

- Unhappy users

- Useless reports

- Extra costs

- Compliance headaches

But with the right approach, data becomes a strength — not a liability.

- Profile and clean your data early

- Fit it to Dynamics 365, not legacy quirks

- Validate, reconcile, and document

- Involve business users throughout

- Treat data quality as a strategic priority

A clean start in Dynamics 365 begins with clean data. And that’s something worth investing in.

The good news is that these potential mistakes can be avoided early on with a detailed migration plan. Consult with our experts to create one for your team.

TL;DR:

Copilot Studio isn’t inherently expensive — unexpected costs usually come from how Microsoft’s licensing model counts Copilot Credits. Every generative answer, connector action, or automated trigger consumes quota, and without planning, usage can escalate fast. In 2025 you can choose from three models: pay-as-you-go for pilots and seasonal spikes, prepaid subscriptions for steady internal usage, or Microsoft 365 Copilot subscriptions for enterprise-wide internal agents. The key is to start small, measure consumption for 60–90 days, and then align the right model with your organisation’s actual usage. Done right, Copilot Studio becomes a cost-efficient productivity engine rather than a budget surprise.

“Why did our agent cost more than we expected?”

It’s a question many IT leaders have faced in recent months. A pilot agent launches, quickly starts answering HR questions, booking meetings, or integrating with Outlook and SharePoint. Then Finance opens the first month’s bill and quickly spots something that doesn’t add up.

The surprise rarely comes from Copilot Studio being unreasonably expensive. More often, it’s because the licensing model works differently to what Microsoft 365 administrators are used to. Without planning, credit consumption can escalate quickly, especially when generative answers, connectors, and automated triggers are involved.

In this post, we break down exactly how Copilot Studio licensing works in 2025, how credits are counted, and how to pick the right model for your organisation. We’ll also share real examples of how Copilot Studio can deliver a return on investment, helping you build a strong case for adoption.

The basics: what you must have

When planning a secure Copilot Studio rollout, it’s important to understand who needs a licence and who doesn’t.

- End-user access — Once an agent is published, anyone with access to it can use it without needing a special licence. The only exception is if they’re using Microsoft 365 Copilot (licensed separately).

- Creator licences — Anyone building or editing Copilot agents must have a Microsoft 365 plan that includes the Copilot Studio creator capability. These plans include the Copilot Studio user licence.

- Custom integrations — Included at no additional cost. This lets you build integrations so your agents can connect to internal systems, databases, or APIs beyond the standard connectors.

Three licensing options — and when to use them

Pay-as-you-go (without M365 Copilot licence)

How it works:

- Standard (non-generative) answer = 1 Copilot Credit ($0.01 per message)

- Generative answer = 2 Copilot Credits

- Action via connector/tool = 5 Copilot Credits

- Agent Flow Actions (100 runs) = 13 Copilot Credits

Additional AI tool usage

If your agents use certain built-in AI tools, these also consume credits from your licence capacity. The number of credits deducted depends on the tool type and usage level:

- Text and generative AI tools (basic) — 1 Copilot Credit per 10 responses

- Text and generative AI tools (standard) — 15 Copilot Credits per 10 responses

- Text and generative AI tools (premium) — 100 Copilot Credits per 10 responses

These deductions apply in addition to the per-credit costs outlined above for standard answers, generative answers, and actions. Make sure to factor these into your usage forecasts to avoid hitting capacity limits sooner than expected.
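The rates above can be turned into a rough monthly estimator. This sketch uses the credit values listed in this post and assumes a standard answer counts as 1 Copilot Credit billed at $0.01 on pay-as-you-go, which matches the $0.01 per message figure. It also assumes flow actions and AI tool responses are pro-rated rather than billed in whole blocks of 100 or 10. `MonthlyUsage` and `monthly_credits` are hypothetical names; check real consumption in your tenant before relying on the output.

```python
from dataclasses import dataclass, field

USD_PER_CREDIT = 0.01  # assumption: pay-as-you-go rate per Copilot Credit

# Generative, action, flow and AI tool rates as listed in this post;
# 1 credit per standard answer is an assumption matching $0.01 per message.
CREDITS_PER_STANDARD_ANSWER = 1
CREDITS_PER_GENERATIVE_ANSWER = 2
CREDITS_PER_CONNECTOR_ACTION = 5
CREDITS_PER_100_FLOW_ACTIONS = 13
CREDITS_PER_10_AI_TOOL_RESPONSES = {"basic": 1, "standard": 15, "premium": 100}


@dataclass
class MonthlyUsage:
    """Hypothetical monthly usage forecast for one agent."""
    standard_answers: int = 0
    generative_answers: int = 0
    connector_actions: int = 0
    flow_actions: int = 0
    ai_tool_responses: dict[str, int] = field(default_factory=dict)  # tier -> responses


def monthly_credits(u: MonthlyUsage) -> float:
    credits = (u.standard_answers * CREDITS_PER_STANDARD_ANSWER
               + u.generative_answers * CREDITS_PER_GENERATIVE_ANSWER
               + u.connector_actions * CREDITS_PER_CONNECTOR_ACTION
               # Assumption: flow actions are pro-rated, not rounded up to blocks of 100.
               + u.flow_actions / 100 * CREDITS_PER_100_FLOW_ACTIONS)
    for tier, responses in u.ai_tool_responses.items():
        # Assumption: AI tool responses are pro-rated, not rounded up to blocks of 10.
        credits += responses / 10 * CREDITS_PER_10_AI_TOOL_RESPONSES[tier]
    return credits


usage = MonthlyUsage(standard_answers=1_000, generative_answers=3_000,
                     connector_actions=400, flow_actions=500,
                     ai_tool_responses={"standard": 200})
credits = monthly_credits(usage)
print(credits, round(credits * USD_PER_CREDIT, 2))  # 9365.0 93.65
```

In this made-up forecast, connector actions are only about 12% of the volume but produce over a fifth of the credits, which is why action-heavy designs deserve a closer look.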

When to use:

- Pilots or early adoption, where usage is unpredictable

- Seasonal projects

- Avoiding unused prepaid capacity

Watch out for: Public-facing agents without access control. A customer support agent on your website could easily generate thousands of Copilot Credits a day — and a large bill.

Example: A regional HR team builds an agent to answer policy questions during onboarding periods. In January and September usage spikes, but it’s minimal the rest of the year. Pay-as-you-go keeps costs in proportion to demand.

Prepaid subscription

How it works: $200/month for 25,000 Copilot Credits at the tenant level, with the option to add pay-as-you-go for overages.

Cost efficiency compared to pay-as-you-go

This plan still counts usage in Copilot Credits, just like pay-as-you-go, but the effective rate is lower: around $0.008 per Copilot Credit ($200 ÷ 25,000). If your organisation has steady or high Copilot Studio usage, this can offer better value than relying solely on pay-as-you-go pricing.

When to use:

- Predictable, steady internal usage

- Large organisations where agents are part of daily operations

Watch out for: Unused Copilot Credits don’t roll over. Overages revert to pay-as-you-go rates.
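Whether prepaid pays off is a simple break-even question. Assuming pay-as-you-go costs $0.01 per Copilot Credit, a $200 pack is only worth buying if you would otherwise spend more than $200 on pay-as-you-go, i.e. above roughly 20,000 credits a month, because unused credits are lost and overage is charged at pay-as-you-go rates. The sketch below picks the number of packs with the lowest monthly cost for a given forecast; the function names are hypothetical.

```python
PACK_CREDITS = 25_000       # prepaid subscription: 25,000 Copilot Credits...
PACK_PRICE_USD = 200.0      # ...for $200 per month, as quoted in this post
PAYG_USD_PER_CREDIT = 0.01  # assumption: pay-as-you-go rate, also applied to overage


def payg_cost(credits: float) -> float:
    return credits * PAYG_USD_PER_CREDIT


def prepaid_cost(credits: float, packs: int) -> float:
    """Packs are paid in full whether used or not (no rollover); overage is PAYG."""
    overage = max(0.0, credits - packs * PACK_CREDITS)
    return packs * PACK_PRICE_USD + payg_cost(overage)


def cheapest_packs(credits: float, max_packs: int = 20) -> int:
    """Number of prepaid packs (0 = pure pay-as-you-go) with the lowest monthly cost."""
    return min(range(max_packs + 1), key=lambda p: prepaid_cost(credits, p))


for monthly in (15_000, 22_000, 60_000):
    print(monthly, cheapest_packs(monthly))
# 15000 0
# 22000 1
# 60000 2
```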

Example: An IT operations team runs an agent that handles password resets and system outage FAQs. Daily traffic is consistent year-round, making a prepaid subscription more cost-effective.

M365 Copilot subscription

$30/user/month for M365 Copilot — includes Copilot Studio within Teams, SharePoint, and Outlook.

Best for:

- Enterprises where everyone already uses M365

- Internal-only agents

Limitation: Agents can’t be published to public websites or customer portals.

Example: Sales uses Copilot Studio inside Teams to retrieve up-to-date product information and customer account details from SharePoint. No public access is required, so the M365 Copilot subscription covers the entire team without additional credit planning.

Copilot Credit counting: the hidden budget driver

Understanding your licence is one thing; knowing how fast you consume it is another.


Why it matters:

- Actions add up quickly — especially if your agent uses multiple connectors.

- MCP (Model Context Protocol) allows multiple tools per agent, but each action still counts.

- Triggers can be on-demand or autonomous — an agent that checks a knowledge base every morning will consume quota even if no one interacts with it.
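The trigger point is easy to underestimate because consumption scales with the schedule, not with the number of users. This sketch applies the generative answer (2 credits) and action (5 credits) rates listed in this post to an agent that runs with no one interacting with it; the agent design and the 30-day month are hypothetical.

```python
GENERATIVE_ANSWER_CREDITS = 2  # as listed in this post
ACTION_CREDITS = 5             # as listed in this post


def scheduled_agent_credits(runs_per_day: int, generative_answers_per_run: int,
                            actions_per_run: int, days: int = 30) -> int:
    """Credits an autonomous, trigger-driven agent uses with no user interaction at all."""
    per_run = (generative_answers_per_run * GENERATIVE_ANSWER_CREDITS
               + actions_per_run * ACTION_CREDITS)
    return runs_per_day * days * per_run


# Hypothetical morning check: read the knowledge base, summarise, post to Teams.
print(scheduled_agent_credits(runs_per_day=1, generative_answers_per_run=1, actions_per_run=2))   # 360
# The same agent switched to an hourly schedule.
print(scheduled_agent_credits(runs_per_day=24, generative_answers_per_run=1, actions_per_run=2))  # 8640
```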

How to increase the ROI of Copilot Studio

Licensing becomes an easier conversation when you can link it to measurable value.

Productivity gains examples:

- IT Ops: An L1 support agent resolving 200 password resets per month. Even at $0.02 per generative answer, that’s $4 — far less than the labour cost of 200 helpdesk tickets.

- HR: An onboarding agent that answers repetitive questions instantly, freeing HR staff for complex cases.

- Finance: An agent that automatically answers FAQs on expense policies during busy quarter-end periods.

- Field Operations: Scheduling and updating tasks via chat, without logging into multiple systems.
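The password-reset example can be generalised into a small calculation that sets credit spend against the staff time an agent replaces. The sketch assumes $0.01 per Copilot Credit; minutes saved and hourly cost are hypothetical placeholders you would replace with your own figures. If each reset also calls a connector action, `credits_per_task` rises from 2 to 7 at the rates listed in this post.

```python
def monthly_roi(tasks: int, credits_per_task: float, minutes_saved_per_task: float,
                hourly_cost_usd: float, usd_per_credit: float = 0.01) -> dict[str, float]:
    """Compare agent credit spend with the staff time it replaces (all inputs are estimates)."""
    agent_cost = tasks * credits_per_task * usd_per_credit
    labour_saved = tasks * minutes_saved_per_task / 60 * hourly_cost_usd
    return {"agent_cost": round(agent_cost, 2),
            "labour_saved": round(labour_saved, 2),
            "net_benefit": round(labour_saved - agent_cost, 2)}


# 200 password resets at 2 credits (one generative answer) each, as in the example above.
# The 10 minutes saved and $40/hour figures are hypothetical placeholders.
print(monthly_roi(tasks=200, credits_per_task=2, minutes_saved_per_task=10, hourly_cost_usd=40))
```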

Best practice: Start with pay-as-you-go. Track Copilot Credit usage for 60–90 days to create a baseline. Then decide if prepaid makes financial sense.

Avoid these common Copilot Studio licensing mistakes

- Public agents without usage limits — ideal for engagement, dangerous for budgets.

- Overbuying prepaid packages “just in case” — unused capacity is wasted.

- Action-heavy flows — five Copilot Credits per action means complex workflows can burn through quotas quickly.

- Ignoring triggers — scheduled or event-based actions still count towards your total.

Choosing the right model
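The guidance in this post can be summarised as a rule-of-thumb decision helper. This is a sketch, not a pricing tool: the break-even figure assumes $0.01 per pay-as-you-go credit and the $200 for 25,000 credits prepaid pack, and the function name and parameters are hypothetical.

```python
from typing import Optional

# $200 prepaid pack / $0.01 per pay-as-you-go credit (assumed rate) = 20,000 credits.
PREPAID_BREAKEVEN_CREDITS = 20_000


def recommend_model(public_facing: bool, all_users_have_m365_copilot: bool,
                    usage_is_steady: bool,
                    baseline_credits_per_month: Optional[float]) -> str:
    """Rules of thumb from this post; a starting point for discussion, not a quote."""
    if not public_facing and all_users_have_m365_copilot:
        # Internal-only agents for users who already have M365 Copilot.
        return "M365 Copilot subscription"
    if baseline_credits_per_month is None or not usage_is_steady:
        # Pilots, seasonal spikes, or no 60-90 day baseline yet.
        return "pay-as-you-go"
    if baseline_credits_per_month > PREPAID_BREAKEVEN_CREDITS:
        return "prepaid subscription (overage at pay-as-you-go rates)"
    return "pay-as-you-go"


print(recommend_model(False, True, True, 50_000))  # M365 Copilot subscription
print(recommend_model(True, False, False, None))   # pay-as-you-go
print(recommend_model(True, False, True, 60_000))  # prepaid subscription (overage at pay-as-you-go rates)
```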


Making Copilot Studio licensing work for your organisation

Copilot Studio is one of Microsoft’s most flexible AI building tools — but that flexibility has cost implications. If you design agents without thinking about Copilot Credit counts, licence tiers, and ROI, your pilot could become an expensive surprise. If you start small, measure everything, and match the licensing model to actual use, you can turn Copilot Studio into a self-funding productivity engine.

The organisations getting the most from Copilot Studio in 2025 are not the ones simply buying licences. They are the ones designing for efficiency from the start.

Not sure which licensing model fits your use cases? Contact us to review your use case and estimate costs.


TL;DR:

GPT-5 (launched 7 Aug 2025) now powers Microsoft 365 Copilot, GitHub Copilot, Copilot Studio, and Azure AI Foundry. It adds a “smart mode” for speed vs. reasoning, but reactions are mixed: slower on complex tasks, overly strict filtering, change fatigue, and licensing concerns. Still, strengths stand out — clearer outputs, better sequencing, role‑aware customisation, and solid knowledge management. To get the most out of it, use precise prompts, link it to reliable sources, and build role-specific prompt libraries. Our take: ignore the noise, test it on your real processes, and judge by outcomes, not clickbait headlines and personal opinions.

What’s happening and why it matters

When OpenAI released GPT-5, Microsoft wasted no time weaving it into its ecosystem. GPT-5 is now the default engine behind Copilot experiences across Microsoft 365, GitHub and Azure, making Copilot the place where most people will experience the upgrade. What makes it different is the addition of “smart mode”: dynamic routing that automatically switches between lighter, faster models for simple tasks and slower, more reasoning-heavy ones for complex, multi-step problems. You don’t need to choose; Copilot does it for you.

Licensed Microsoft 365 Copilot users got priority access on day one, with others following in phased waves. In Copilot Studio, GPT-5 is already available in early release cycles for building and testing custom agents.

Why do some people dislike GPT-5?

Online discussion of GPT-5 has been a bit chaotic. When the announcement dropped, the hype spread like wildfire, with bold claims of “human-level reasoning,” “a game-changer,” even “the biggest leap since GPT-3.” Then came reality: early adopters ran their own tests, and a backlash followed. These are the main pain points they raised:

- Overpromised, underdelivered

People expected perfection. Instead, they found a smarter but still fallible LLM. It makes fewer reasoning mistakes, but hallucinations and generic text haven’t disappeared.

- Slower on complex tasks

Deep reasoning mode can feel sluggish compared with quick drafts, because it works through more steps before it answers. Still, in an operations setting, slow answers can feel like lost time.

This shows up most clearly in Copilot when you’re drafting long documents or analysing complex data models.

- Too safe, too filtered

Some users noticed that Copilot holds back even on harmless internal queries, as Microsoft’s enterprise safety filters are set quite conservatively.

- Change fatigue

Frequent Copilot updates introduce variability in workflows and prompt behaviour, requiring users to continually adapt. This can disrupt established processes and create the perception of enforced adoption rather than incremental, planned change management.

- Licensing worries

Licensing itself hasn’t changed for now — GPT-5 is included in existing Microsoft 365 Copilot licences. However, heavy use of custom agents in Copilot Studio could generate additional compute or API costs, particularly in high-volume scenarios. Keep a close eye on usage metrics to anticipate potential budget impacts.
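If your admin reporting gives you daily consumption figures for your agents, a simple linear projection is often enough to spot a budget problem before month end. The Python sketch below assumes you already have those daily figures in whatever unit you are billed in (for example, Copilot Credits); the usage numbers, the monthly capacity, the 80% warning threshold and the `budget_status` helper are hypothetical, not Microsoft rates or defaults.

```python
from typing import Sequence, Tuple

# Illustrative sketch only: the usage figures, capacity and 80% warning
# threshold are hypothetical, not Microsoft rates or defaults.


def project_month_end(daily_usage: Sequence[float], days_in_month: int) -> float:
    """Linear projection: average daily usage so far x days in the month."""
    if not daily_usage:
        raise ValueError("need at least one day of usage data")
    average = sum(daily_usage) / len(daily_usage)
    return average * days_in_month


def budget_status(
    daily_usage: Sequence[float],
    days_in_month: int,
    monthly_capacity: float,
    warn_ratio: float = 0.8,
) -> Tuple[str, float]:
    """Return ("ok" | "warning" | "over", projected usage) for the month."""
    projected = project_month_end(daily_usage, days_in_month)
    ratio = projected / monthly_capacity
    if ratio >= 1.0:
        return "over", projected
    if ratio >= warn_ratio:
        return "warning", projected
    return "ok", projected


if __name__ == "__main__":
    # Three days of (made-up) agent usage from a high-volume pilot.
    status, projected = budget_status([900, 1100, 1000], days_in_month=30, monthly_capacity=25_000)
    print(status, projected)  # over 30000.0
```

A straight-line projection ignores spikes such as month-end reporting, so treat a "warning" as a prompt to look at the detailed usage data rather than as a forecast.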

Why it’s still worth your time

If you only read LinkedIn takes, you might think GPT-5 is either magic or useless. But the LMArena benchmarks, a popular platform combining crowd-sourced and in-house evaluations, rank GPT-5 among the top models, in first place in several categories, including hard prompts, coding, math, and instruction following (as of 20 August 2025).

In practice, this can bring tangible benefits to operations teams using Copilot:

- Better sequencing: It arranges tasks in the right order with less manual prompting.

- Improved clarity: Outputs read more like a professional SOP than a messy checklist.

- Role-specific tailoring: You can adapt outputs for different audiences, from new hires to experienced staff.

- Knowledge management: Scattered notes, Teams chats and old docs can quickly become structured guides.

- Field support: With Copilot on mobile, field workers can query internal manuals instantly, from safety procedures to troubleshooting steps. Add image upload and ticket creation, and you have lightweight expert support in the field.

- Everyday admin: Drafting project updates, onboarding packs, change announcements and policies, and even scheduling factory visits all work better than with GPT-4.

In short: it’s not flawless, but it is useful — if you know how to use it.

How to make GPT-5 work for you

The difference between frustration and real value with GPT-5 usually comes down to how you use it.

The more context you give, the better the results. Instead of “Write the meeting notes,” try “Summarise the July 10 shift handover meeting, highlighting safety incidents, equipment downtime, and actions assigned to each supervisor.”

For anything going to external stakeholders or compliance teams, human review is still essential. It also pays to connect Copilot to authoritative sources like SharePoint libraries or Teams channels, and to keep those sources tidy so you’re not pulling in outdated content.

If you want consistent tone and structure, build reusable templates in Copilot Studio and share a simple prompt cheat sheet with your team — common starters like “Draft…”, “Compare…” or “Summarise…” go a long way.

Keep training role-specific: show each team how GPT-5 makes its day-to-day tasks easier, start small, and scale up as confidence grows.

And perhaps most importantly, set expectations: deep reasoning is for complex, critical work, while fast mode is best for quick drafts — and in all cases, human judgement still matters.

Don’t rely on benchmarks alone — see how it performs on your tasks

GPT-5 is a meaningful upgrade that, in the right hands, can save hours of manual effort and make knowledge more accessible across teams. But it demands smart adoption: clear prompts, proper governance, and a willingness to test it against your real processes.

Ignore the noise. Don’t take the word of content creators or sales decks at face value. Put GPT-5 to work on your use cases, measure the outcomes, and decide for yourself.

Want your teams to use Copilot like a pro? Request a live workshop so you can scale operations without increasing headcount.