TL;DR

Power Apps has introduced a new AI-native authoring experience (Vibe) where users describe a business need and the platform generates a complete application with data model, screens, logic and flows. This shifts Power Apps from low-code to intent-driven, no-barrier app creation. The direction is clear: Power Apps is becoming the AI application layer in Microsoft’s ecosystem, where natural language replaces manual app building. Organisations that modernise governance and data foundations early will benefit most.

Is low-code dead?

Earlier this year at the Power Platform Community Conference, the Business and Industry Copilot keynote introduced a phrase that has become the headline of the year:

“Low code is dead as we know it.”

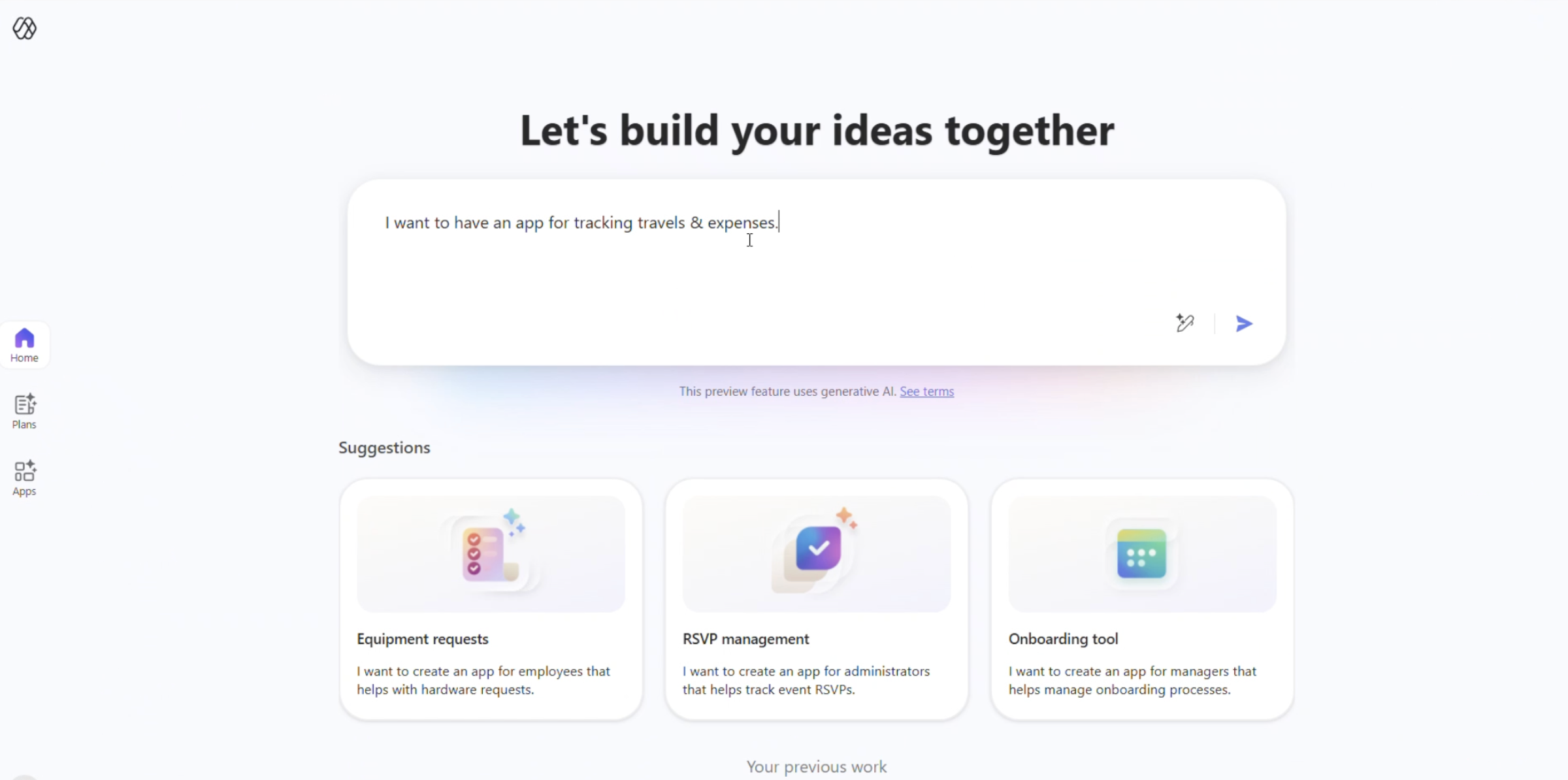

It was a recognition that the foundations of app development are changing. That shift became tangible a few weeks later at Microsoft Ignite, where Microsoft announced Vibe, the new AI-native Power Apps experience, now available through vibe.powerapps.com.

Instead of assembling screens and formulas, users now begin with intent. They describe a business problem, and Power Apps generates a working application.

This is the most significant evolution in the platform’s history, and it signals the start of a new era: AI-generated solution development.

From low-code to no-barrier

For years, Power Apps lowered the bar for business users, but the entry point was still higher than many expected. Even confident makers needed to understand components, tables, relationships, formulas and connectors. Low-code democratised development, but it didn’t eliminate complexity.

That barrier disappears in the new Power Apps experience. A user can simply type:

“I want an app to track travel and expenses.”

“I need a customer service solution.”

Within a few seconds, Power Apps produces a structured plan, a data model, screens, navigation, logic and relevant Power Automate flows. What previously required hours of design work appears almost instantly.

One message from Ignite was especially clear: the barrier to building business applications is disappearing. In the new experience, users no longer need technical skills to produce a functional app; describing the requirement is enough.

This isn’t simply an evolution of low-code tools. It marks the shift to AI generating enterprise-ready applications on demand.

A platform that codes for you

The new experience is not simply a more intelligent canvas. In Vibe Power Apps, the system generates React and TypeScript–based code structures behind the scenes. When a user asks to adjust alignment, change the theme, or add a new view, the platform updates the underlying structure and validates the changes automatically.

This makes Plan Designer, which produces that structured plan, more than a scoping tool. It provides a shared plan that guides how different Power Platform solutions are created. As requirements change, the plan can be updated and reused, even when apps, automations, or agents are built through different experiences.

Professional developers still play a key role, but their work shifts to higher-value layers. Instead of starting from scratch, they build on AI-generated foundations, refining implementations, integrating systems, and ensuring quality, security, architecture, and long-term sustainability.

Note: As with any evolving platform capability, the underlying implementation details may continue to change as the experience matures.

What this means for your organisation

1. Unmatched speed

Teams can now generate functional prototypes during the same meeting where ideas are discussed. This reduces the distance between identifying a need and testing a solution. Digitalisation accelerates because the early-stage friction is removed.

2. A new role for IT

As creation becomes easier, oversight becomes more important. IT transitions from being the primary builder to the orchestrator of the platform: setting standards, maintaining the data estate, defining environment strategy, enforcing DLP policies and ensuring the organisation doesn’t accumulate fragmented models or unmanaged apps.

The challenge is not that too many people can build. It’s making sure they build in a governed, consistent and secure way.

3. Data quality becomes make-or-break

AI can generate an app, but it cannot correct underlying structural issues. If organisations have duplicated tables, weak ownership or mismatched schemas, AI will amplify that complexity.

Strong data foundations, particularly the relationship between Dataverse, Fabric and line-of-business systems, become essential.
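To make “mismatched schemas” concrete, here is a minimal Python sketch. It compares two table definitions that are supposed to describe the same entity and reports columns that are missing on either side or typed differently. The table and column names are hypothetical, and the schemas are plain dictionaries rather than anything read from Dataverse or Fabric.

```python
# Minimal sketch: compare two hypothetical table schemas that should describe
# the same business entity. Schemas are plain {column_name: type_name} dicts.


def compare_schemas(left: dict[str, str], right: dict[str, str]) -> dict[str, list[str]]:
    """Report columns missing from either side and columns whose types differ."""
    only_left = sorted(set(left) - set(right))
    only_right = sorted(set(right) - set(left))
    type_conflicts = sorted(
        col for col in set(left) & set(right) if left[col] != right[col]
    )
    return {
        "only_in_left": only_left,
        "only_in_right": only_right,
        "type_conflicts": type_conflicts,
    }


# Two hypothetical "customer" tables maintained by different teams.
crm_customer = {"customer_id": "guid", "name": "text", "country": "text"}
sales_customer = {"customer_id": "int", "name": "text", "region": "text"}

report = compare_schemas(crm_customer, sales_customer)
print(report)
```

An app generated on top of either table inherits these conflicts, which is why they are cheaper to resolve in the data model than in every generated app.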

4. Higher business value

When ideas turn into runnable apps within minutes, organisations can test, iterate and validate far more frequently. This expands the capacity for innovation without expanding headcount.

Important limitations of the preview

The experience is powerful, but still early-stage. As of 17th December, it’s only available in North America, so you need to set up a US developer environment if you want to experiment with it.

You should also keep in mind that:

- Apps made in the new interface cannot be edited in classic Power Apps.

- Exporting and redeploying via CLI creates a separate app rather than updating the original.

- Only one app per plan is supported today.

- Canvas and model-driven apps cannot be opened or edited in the new experience.

- Existing Dataverse tables aren’t automatically suggested, and schema editing via chat is not yet available.

- Direct code editing is intentionally restricted.

These limitations are not drawbacks; they are indicators that Microsoft is building a new category — an AI-first app development environment — that will grow alongside the traditional maker experiences.

AI as the application layer

Recent Microsoft announcements point to a broader shift across the ecosystem. Copilot is becoming the primary interface for work. Natural language is becoming the universal input. Business processes, data and applications are increasingly orchestrated behind conversational interactions.

In this future, Power Apps becomes not just a place to build apps, but the AI-native application layer that connects humans, processes and data.

Organisations that adapt early will move faster, reduce bottlenecks and empower their teams, but only if they also modernise their governance, data strategy and architectural foundations.

A turning point for the platform and for your organisation

The new Power Apps experience represents a structural shift in how digital solutions are created. The traditional act of building — dragging controls, writing formulas, configuring screens — is giving way to a world where ideas become apps instantly. Developers gain time to focus on high-value engineering. Business users gain the confidence to create. IT gains the responsibility to shape the ecosystem that makes it all run safely.

Low-code is not disappearing. But the era of manual low-code development is fading.

Intent is becoming the new development language.

Want to move from AI curiosity to real, measurable impact? Get in touch for an AI readiness workshop.

TL;DR

Most organisations want AI but aren’t ready for it. Real AI adoption means either augmenting employees with copilots or creating autonomous agents—but both require clean data, documented processes, and strong governance. If workflows live in Excel, approvals happen in chats, or data is scattered, AI has nothing reliable to operate on. Once processes are structured and people understand how to work with AI, the organisation can finally unlock decision intelligence, safe automation, and meaningful impact. AI doesn’t fail because the model is bad—it fails because the foundations aren’t there. Build readiness first, value follows.

What companies get wrong about “adding AI”

Every organisation wants to “implement AI”, but few can describe what that actually means.

Is it adding Copilot to meetings?

Automating tasks with Power Automate?

Building agents that take decisions on your behalf?

The reality is that most companies don’t yet know what they want to achieve with AI, and even fewer are ready for it. Not because they lack tools, but because their people, processes, and technology aren’t structured for AI to operate safely, reliably, and at scale.

This post breaks down, in practical terms, what organisations truly need for AI-enabled business processes, the common pitfalls we see again and again, and a clear framework your organisation can actually use to get started.

This is the second part of our series on agentic AI. Read more about

- How to get your organisation ready for agentic AI

- How to help employees adopt agentic AI

- How to improve the ROI of agentic AI

What “adding AI” really means

When most teams say they want to “add AI”, they usually mean one of two things, and each has very different requirements.

1. Extend the worker (AI-augmented work)

This is where copilots and conversational assistants truly shine: helping employees search company knowledge, summarise decisions, retrieve documents, and take routine actions. But this only works if:

- the AI actually understands your business data,

- the data is structured and governed, and

- the agent is not given decision rights that introduce risk.

The system must understand the company’s knowledge, not just respond to prompts.

2. Create autonomous workflows (AI agents)

This is the more advanced path: agents that make limited decisions, move work between systems, and act without constant human supervision.

But autonomy does not mean freedom. Governance is key. An agent should operate within a clearly defined scope and should take business-critical decisions only when it has been given clear criteria.

This distinction matters because it forces organisations to re-examine how they work. If your processes are unclear, inconsistent, or undocumented, AI will reveal that very quickly.

Before you automate anything, understand the real process

One of the first questions we ask in readiness workshops is deceptively simple:

“How does this process actually work today?”

Almost always, the answer reveals a gap between intention and reality:

- Sales opportunities tracked in Excel

- Approval steps handled informally in Teams chats

- Documents scattered across personal drives

- Edge cases handled by “whoever knows how to do it”

This is where it all breaks down. AI cannot automate a process if even humans cannot describe it. If a process isn’t documented, it's technical debt.

Another red flag is when organisations want to “keep the process exactly as it is” and simply add AI on top. AI doesn’t work that way. If the process itself is inefficient, undocumented, or built on manual workarounds, no amount of automation will save it.

To get real value, the process must be worth automating in the first place, ideally delivering a 10x improvement when reimagined with AI.

The hidden bottleneck: your data

Every AI workflow, from copilots to autonomous agents, relies on data being structured, governed, consistent, discoverable, and stored in systems designed for long-term work.

If you’re tracking key business processes in Excel, you’re not AI-ready. Excel is brilliant for calculations, but it is not designed for workflow execution, audit trails, role-based access, entity relationships, or system-to-system integration.

Data kept in Excel is effectively unstructured: nothing enforces its schema, ownership or history. You cannot build AI on manually maintained data.

The good news is that Microsoft’s systems are AI-ready by design:

- Dynamics 365 for structured sales and service processes

- Dataverse for the unified data backbone

- SharePoint for document lifecycle and governance

- Teams and Loop for shared context and collaboration

If your processes live outside these systems, your AI will operate without context, or worse, without safety.

And if your data sits on old on-premises servers? Connecting them to modern AI systems becomes slow, fragile, and expensive. AI thrives in the cloud because the cloud creates the structure AI needs.

Designing workflows where AI and humans work together, safely

Once processes are structured and data is governed, the next question is:

what should AI do, and what should humans do?

There’s a simple rule of thumb:

- High-impact, high-risk, or ambiguous decisions → human

- High-volume, low-risk, routine steps → AI

This is where human-in-the-loop design becomes essential. A well-designed AI workflow should:

- Define exactly where humans intervene

- Log every AI action for traceability

- Provide confidence scores and explanations

- Avoid overwhelming people with unnecessary alerts

- Keep the final accountability with the human owner

Humans should use judgement, handle exceptions, and ensure ethical and correct outcomes. AI should do the repetitive work, the data consolidation, and the first pass of tasks.
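The rule of thumb and the workflow checklist above can be sketched in a few lines of Python. This is a minimal illustration, not a Copilot Studio or Power Automate feature: the `Task` fields, the risk labels and the 0.8 confidence threshold are assumptions chosen for the example. Low-risk, unambiguous steps with high model confidence go to the AI; everything else is routed to a human, and every decision is logged with its confidence score, its reason and the accountable owner.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical threshold: below this confidence, even routine work goes to a human.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Task:
    task_id: str
    risk: str            # assumed labels: "low" or "high"
    ambiguous: bool      # True when the request has no clear criteria
    confidence: float    # model confidence for its proposed action, 0.0-1.0


@dataclass
class Router:
    owner: str                                   # human accountable for outcomes
    audit_log: list[dict] = field(default_factory=list)

    def route(self, task: Task) -> str:
        """Return "ai" or "human" and log the decision for traceability."""
        if task.risk == "high":
            handler, reason = "human", "high-risk decision"
        elif task.ambiguous:
            handler, reason = "human", "ambiguous request"
        elif task.confidence < CONFIDENCE_THRESHOLD:
            handler, reason = "human", "low confidence"
        else:
            handler, reason = "ai", "routine, low-risk, confident"
        self.audit_log.append({
            "task_id": task.task_id,
            "handler": handler,
            "reason": reason,
            "confidence": task.confidence,
            "accountable_owner": self.owner,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })
        return handler


router = Router(owner="finance.lead@example.com")
print(router.route(Task("T1", risk="low", ambiguous=False, confidence=0.95)))   # ai
print(router.route(Task("T2", risk="high", ambiguous=False, confidence=0.99)))  # human
print(router.route(Task("T3", risk="low", ambiguous=False, confidence=0.6)))    # human
```

The ordering of the checks is a design choice: risk is evaluated before confidence, so a high-risk decision goes to a human however confident the model is.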

AI readiness is also about people, not just systems

One of the most underestimated aspects of AI readiness is human behaviour. For AI to work as intended, business users must:

- Be curious

- Know how to break their work into steps

- Be willing to adapt workflows

- Understand where data lives

- Ask questions and refine prompts

- Avoid bypassing the process when “it’s easier to do it my way”

Processes fail when people resist the change because they don’t understand the “why”. And they fail just as quickly when employees work around the automation or keep using personal storage instead of governed systems.

AI introduction is as much a cultural shift as it is a technical programme.

What you can finally ask once AI-readiness is achieved

Once the foundations are in place, people begin asking questions that were previously impossible:

“Which of our suppliers pose the highest risk based on the last 90 days of invoices?”

“What decisions were made in the last project meeting, and who owns them?”

“Show me opportunities stuck for more than 30 days without activity.”

“Draft a customer update using the last three emails, the CRM history, and the contract.”

“Alert me when unusual patterns appear in our service requests.”

These are questions an agent, not a chatbot, can answer. But only if the process is structured and the data is clean.
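Why structure matters is easiest to see with the third question. Once every opportunity is a record with a last-activity date, “stuck for more than 30 days” becomes a simple filter that an agent can evaluate reliably. The sketch below uses hypothetical in-memory records rather than a Dynamics 365 or Dataverse query; the field names are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Opportunity:
    name: str
    owner: str
    last_activity: date   # assumed field: date of the last logged activity


def stuck_opportunities(opps: list[Opportunity], today: date, days: int = 30) -> list[str]:
    """Names of opportunities with no activity for more than `days` days."""
    cutoff = today - timedelta(days=days)
    return [o.name for o in opps if o.last_activity < cutoff]


pipeline = [
    Opportunity("Contoso renewal", "Anna", date(2025, 9, 1)),
    Opportunity("Fabrikam upsell", "Ben", date(2025, 10, 20)),
    Opportunity("Northwind pilot", "Anna", date(2025, 10, 1)),
]
print(stuck_opportunities(pipeline, today=date(2025, 11, 1)))
```

The filter is only as reliable as the `last_activity` field: if activity is logged inconsistently, or the date lives in a free-text spreadsheet column, the answer is wrong no matter how capable the agent is.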

AI doesn’t fail because the model is bad. It fails because the organisation isn’t ready

Before building agents, copilots, or automations, ask yourself:

- Would AI understand our processes, or would it get lost in exceptions?

- Is our data structured, governed, and accessible?

- Do our people know how to work with AI, not around it?

- Are we prepared to support safe, auditable, and reliable AI operations?

If the answer is “not yet”, you’re not alone. Most organisations are still early in their readiness journey. But once the foundations are there, AI value follows quickly, safely, and at scale.


TL;DR

90% of organisations aren’t ready for agentic AI because their people, processes, and technology are still fragmented. Before building copilots or custom agents, companies must become data-first organisations: establishing strong governance, integrating foundational systems, and replacing manual processes with structured, interconnected workflows. With agentic blueprints and a proven methodology grounded in value, architecture, and design patterns, Microsoft-centric organisations can gain AI value faster and more safely.

90% of organisations aren’t AI-ready. Are you?

Everyone is talking about AI agents — or copilots — that summarise meetings, answer questions, trigger workflows, and automate the routine.

Most organisations believe they’re “ready for AI” because they use ChatGPT or Copilot. But agentic AI only becomes valuable when the foundations are in place — governed data, consistent processes, and interconnected systems.

In reality, around 90% of organisations aren’t there yet. Their data lives in silos, processes run on spreadsheets, and collaboration happens in unstructured ways that AI cannot interpret.

So before you rush to “add AI,” stop for a moment. Is your organisation truly ready for an agentic AI strategy, or are you still running on Excel?

This is the first part of our series on agentic AI. Read more about

- How to introduce AI into your business processes

- How to help employees adopt agentic AI

- How to improve the ROI of agentic AI

From automation to augmentation

Many companies start here: Sales teams track opportunities in Excel. Documents live in personal folders. Collaboration happens over email or private Teams chats.

It works until it doesn’t. Because when you ask, “Can we plug AI into this?” the answer depends entirely on how your work is structured.

For AI to deliver value, your processes and data need to be consistent and governed. If information sits in silos or moves around without clear ownership, no Copilot will sort it out for you.

Step 0: Look at how you work

AI can only operate within the workspace it lives in. Before talking about technology, ask a simple question: How does your team actually get work done every day?

- Where do we keep track of our work, such as opportunities, sales/purchase orders, contacts, customers, and contracts?

- Who updates them?

- How are documents stored, shared, and versioned?

If the answer includes “Excel,” “someone keeps a list,” or any other manual step, that’s not AI-ready. Manual tracking makes automation impossible and governance invisible.

When we assess readiness, we start by examining your value patterns: how your teams create value across people, process, and technology. These patterns reveal which activities need to be structured into systems that log every action consistently. Only then can an agent analyse, predict, and assist.

Microsoft’s modern workspace is AI-ready by default

Microsoft’s modern workspace, including SharePoint, Teams, Loop, Dataverse, and Copilot, is already agent-ready by design.

Chat, files, and meeting notes create structured, secure data in the cloud. When your team works in this environment, an AI agent can see what’s happening and safely answer questions like:

- “What was decided in the last project meeting?”

- “Show me invoices from vendors in Q3.”

- “Which opportunities need follow-up this week?”

With even basic tools, you can achieve impressive results. A simple SharePoint automation can pull in invoices, let AI read and structure them into columns (supplier, invoice number, amount), and feed the data into Power BI, all in an afternoon.
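The invoice example boils down to turning free text into a fixed set of columns. In the real scenario an AI model does the reading; in the sketch below a regular expression stands in for that step, purely so the example runs on its own. The invoice text format, the `Invoice` fields and the output file name are assumptions. The result is a CSV with one row per invoice, the kind of tabular output Power BI can import.

```python
import csv
import re
from dataclasses import dataclass, asdict


@dataclass
class Invoice:
    supplier: str
    invoice_number: str
    amount: float


# Stand-in for the AI extraction step: assumes "Label: value" lines.
PATTERNS = {
    "supplier": re.compile(r"Supplier:\s*(.+)"),
    "invoice_number": re.compile(r"Invoice number:\s*(\S+)"),
    "amount": re.compile(r"Amount:\s*([\d.,]+)"),
}


def extract_invoice(text: str) -> Invoice:
    """Pull the three columns out of one invoice's text."""
    values = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match is None:
            raise ValueError(f"missing field: {name}")
        values[name] = match.group(1).strip()
    return Invoice(
        supplier=values["supplier"],
        invoice_number=values["invoice_number"],
        # Assumes comma as thousands separator and dot as decimal point.
        amount=float(values["amount"].replace(",", "")),
    )


def write_csv(invoices: list[Invoice], path: str) -> None:
    """Write one row per invoice with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["supplier", "invoice_number", "amount"])
        writer.writeheader()
        writer.writerows(asdict(inv) for inv in invoices)


docs = [
    "Supplier: Contoso Ltd\nInvoice number: INV-1001\nAmount: 1,250.00",
    "Supplier: Fabrikam Inc\nInvoice number: INV-2002\nAmount: 980.50",
]
invoices = [extract_invoice(d) for d in docs]
write_csv(invoices, "invoices.csv")
```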

Step 1: governance first, AI second

When someone logs into Copilot and asks a question, Copilot will find everything they can access. That’s both the promise and the risk: without strong data loss prevention, AI may surface information you never intended to expose.

This is why governance is the first pillar of any AI readiness strategy, and it’s the foundation of our value–architecture–design pattern methodology. Without clear ownership, access, and data controls, no agent can operate safely.

When we run readiness audits, the first questions aren’t about models or copilots — they’re about access and accountability:

- Who owns each SharePoint site?

- Who has edit rights?

- Is sensitive data over-shared across Teams?

- What happens if a site owner leaves the company?

The good news is that Microsoft’s audit tools automatically flag ownership gaps, oversharing, and risky access patterns so you can act before an AI ever touches the data.
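The audit questions above translate naturally into checks over a permissions inventory. The sketch below runs them against hypothetical in-memory site records; it does not call any Microsoft API, and the field names, the org-wide group labels and the edit-rights threshold are assumptions. In practice the inventory would come from your tenant’s admin reports.

```python
from dataclasses import dataclass

# Hypothetical threshold: more principals with edit rights than this is worth reviewing.
MAX_EDITORS = 25
# Assumed labels for org-wide groups that signal oversharing.
BROAD_GROUPS = {"Everyone", "Everyone except external users"}


@dataclass
class Site:
    url: str
    owners: list[str]
    editors: list[str]        # users or groups with edit rights
    shared_with: list[str]    # everyone with any access, including groups
    sensitive: bool           # assumed flag, e.g. a confidential label


def audit_site(site: Site, directory: set[str]) -> list[str]:
    """Return findings for one site; `directory` holds current employees."""
    findings = []
    # Ownership gap: every listed owner has left the company.
    if not any(owner in directory for owner in site.owners):
        findings.append("no active owner")
    if len(site.editors) > MAX_EDITORS:
        findings.append(f"{len(site.editors)} principals have edit rights")
    if site.sensitive and BROAD_GROUPS.intersection(site.shared_with):
        findings.append("sensitive content shared with an org-wide group")
    return findings


directory = {"anna@contoso.com", "ben@contoso.com"}
sites = [
    Site("https://contoso.sharepoint.com/sites/finance",
         owners=["carl@contoso.com"], editors=["anna@contoso.com"],
         shared_with=["Everyone"], sensitive=True),
    Site("https://contoso.sharepoint.com/sites/marketing",
         owners=["ben@contoso.com"], editors=["ben@contoso.com"],
         shared_with=["ben@contoso.com"], sensitive=False),
]
for site in sites:
    print(site.url, audit_site(site, directory))
```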

Step 2: structure your business data

Even with strong governance, your data still needs structure. AI can read unstructured notes and spreadsheets, but it can’t extract meaningful insights without a consistent data model.

This is where Microsoft’s data ecosystem helps. Its tools connect sales, service, finance, and other processes into a single, governed data layer. Every record (contact, invoice, opportunity) sits in one place with shared logic.

Structuring business data turns your architecture patterns into reality. When CRM, ERP, SharePoint, and collaboration systems are interconnected, you create the unified backbone that agentic workflows rely on.

And that’s where agentic AI truly begins. You can build agents that review opportunities, identify risks, and recommend next steps based on the clean, consistent data flowing through Microsoft 365.

Step 3: from readiness to reality

Once the foundation is solid, the strategy becomes clear:

- Audit your workspace and permissions.

- Standardise how data is collected and stored.

- Govern collaboration and access through Teams and SharePoint admins.

- Enable your first agent — a Copilot, chatbot, or custom agent using Copilot Studio — to assist in everyday processes.

From there, you can start to ask more ambitious questions:

- Which of our processes could an agent safely automate?

- How do we combine Copilot with custom workflows to handle domain-specific tasks?

- What guardrails do we need so that AI doesn’t just act, but acts responsibly?

You also don’t have to start from scratch. Our proprietary baseline agents for Microsoft ecosystems cover common enterprise scenarios and act as accelerators, reducing implementation time and giving you a proven foundation to tailor AI behaviour to your organisation.

Want to learn more? Come to our free webinar.

The right question isn’t “how fast”, it’s “how ready”

Every organisation wants to move fast on AI. But the real differentiator isn’t how early you adopt, it’s how prepared you are when you do.

For small teams, AI readiness can be achieved in weeks. For large, established enterprises, it’s a transformation touching governance, data models, core systems and ways of working.

So before asking “How soon can we deploy an agent?” ask instead:

- “Would our current systems help or confuse the AI?”

- “Can we trust the AI to find, but not expose, our data?”

- “Do our people know how to work with agents, not against them?”

That’s what an agentic AI strategy really is. Not just technology, but the deliberate design of trust, control, and collaboration between humans and AI.

Before you deploy agents, build the trust they need to work

AI adoption is no longer about experimentation. It’s about building the right foundations — governance, structure, and readiness — so your first agents don’t just answer questions, but deliver real, secure value.

Agentic AI starts with readiness. Once your systems, data, and people are ready, intelligence follows naturally.

We help Microsoft-centric organisations move from AI-curiosity to real impact, creating environments where AI agents can operate safely, efficiently, and intelligently.

Join our free 45-minute webinar — we’ll walk you through how to get AI-ready in 90 days.

TL;DR:

Microsoft Fabric isn’t licensed like a simple per-user product. Instead, you buy a capacity (a pool of compute measured in Capacity Units, or CUs) and share it across all the Fabric items you use (lakehouses, SQL databases, notebooks, pipelines, Power BI reports, etc.) for data engineering, warehousing, dashboards and analytics. On top of this, you pay separately for storage and, if you publish dashboards, for certain user licences. The smart path: start small, track your actual consumption, and align capacity size and purchasing model (pay-as-you-go vs reserved) with your usage pattern, so Fabric becomes cost-efficient rather than a budget surprise.

What makes Fabric’s pricing model different

If you’re used to licensing models for analytics tools such as per-user dashboards or pay-per-app, Fabric introduces a different mindset. It’s no wonder many teams are confused and asking questions like:

“Will moving our Power BI set-up into Fabric make our costs spiral?”

“We’re licensing for many users. Do we now have to keep paying per user and capacity?”

“What happens when our workloads spike? Will we pay through the roof?”

Reddit users already ask:

“Can someone explain me the pricing model of Microsoft Fabric? It is very complicated …”

So yes, it’s new, it’s different, and you should understand the mechanics before you start using it.

This is the fourth part of our series on Microsoft Fabric. Read more about

- How Fabric changes enterprise data and AI projects

- When to start using Fabric, and

- How to set up your first Fabric workspace

The basics: what you must understand

Here are the key building blocks:

Capacity = compute pool

Fabric uses “capacity” measured in CUs (Capacity Units). For example, an “F2” capacity gives you 2 CUs, “F4” gives 4 CUs, and so on up to F2048.

That pool is shared by all workloads in Fabric: data flows, notebooks, lakehouses, warehousing, and dashboards.

Important: for most functionality you’re not buying a licence per user, you’re buying compute capacity. Note also that with the pay-as-you-go model you pay whenever the capacity is running, whether you are actively using it or not: you are billed for the time (in minutes) the capacity was on, regardless of whether you used all, some or none of its CUs.
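Because pay-as-you-go billing follows the time the capacity is switched on rather than how busy it is, the main lever is how many hours it runs. The sketch below shows that arithmetic under stated assumptions: `HOURLY_RATE` is a placeholder, not a real Microsoft price (check the Azure pricing page for your region and SKU), and billing granularity and rounding rules are ignored.

```python
# Minimal sketch: PAYG compute cost depends on minutes running, not on
# utilisation. HOURLY_RATE is a hypothetical placeholder, not a real price.
HOURLY_RATE = 1.00  # currency units per hour for the chosen SKU (assumption)


def payg_cost(minutes_running: int, hourly_rate: float = HOURLY_RATE) -> float:
    """Cost of keeping a capacity switched on for the given minutes."""
    return minutes_running / 60 * hourly_rate


def weekly_minutes(hours_per_day: float, days_per_week: int) -> int:
    """Minutes per week the capacity stays switched on."""
    return int(hours_per_day * 60 * days_per_week)


always_on = payg_cost(weekly_minutes(24, 7))        # 168 hours
business_hours = payg_cost(weekly_minutes(10, 5))   # 50 hours, paused otherwise
print(f"always on: {always_on:.2f}, business hours only: {business_hours:.2f}")
```

Whether the capacity sits at 5% or 95% load during those hours, the bill is the same; in this example, pausing outside a 10-hour, 5-day working week cuts the weekly compute cost by roughly 70%.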

Storage is separate

Storage (via OneLake) is billed separately per GB. The capacity you buy doesn’t include unlimited free storage.

There are additional rules, e.g. free storage allowances for mirroring that depend on the capacity tier.

User licences still matter, especially for dashboard publishing

If you use Fabric and you want to publish and share dashboards via Power BI, you’ll still need individual licences (for authors/publishers) and in certain cases viewers may need licences depending on capacity size.

So compute + storage + user licences = the full picture.
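The three parts of the bill can be written as one small function, which is useful for comparing scenarios side by side. In this sketch every rate is a parameter: the compute rate and licence price used in the example call are hypothetical placeholders, and only the default storage rate comes from the $0.023 per GB/month figure quoted later in this article.

```python
# Minimal sketch of the monthly total: compute + storage + user licences.
# Only the default storage rate is taken from this article; the other
# rates in the example call are hypothetical placeholders.


def monthly_fabric_cost(
    compute_hours: float,
    compute_hourly_rate: float,
    storage_gb: float,
    pro_licences: int,
    pro_licence_price: float,
    storage_rate_per_gb: float = 0.023,
) -> dict[str, float]:
    """Break a month's estimate into compute, storage and licence costs."""
    compute = compute_hours * compute_hourly_rate
    storage = storage_gb * storage_rate_per_gb
    licences = pro_licences * pro_licence_price
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "licences": round(licences, 2),
        "total": round(compute + storage + licences, 2),
    }


estimate = monthly_fabric_cost(
    compute_hours=220, compute_hourly_rate=1.0,   # placeholder rate
    storage_gb=500,
    pro_licences=10, pro_licence_price=10.0,      # placeholder price
)
print(estimate)
```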

Purchasing models: pay-as-you-go (PAYG) vs reserved

- Pay-as-you-go: You pay an hourly rate for the capacity while it runs (with a one-minute minimum), and you can scale up or down. Good if usage is variable.

- Reserved capacity: You commit to a capacity size for a year (or more) and get ~40% discount—but you pay whether it’s used or not. Good if your workloads are steady.
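A quick way to compare the two models is to ask how many hours per month the capacity needs to run. Assuming the ~40% reservation discount quoted above and a PAYG bill proportional to running time, a reservation costs 60% of an always-on PAYG capacity, so it only pays off when the capacity would otherwise run for more than about 60% of the month. The sketch below encodes that comparison; the hourly rate is a placeholder and the 730-hour month is an assumed average.

```python
HOURS_PER_MONTH = 730   # assumed average number of hours in a month


def monthly_costs(running_hours: float, payg_hourly_rate: float,
                  reserved_discount: float = 0.40) -> tuple[float, float]:
    """Return (payg_cost, reserved_cost) for one month."""
    payg = running_hours * payg_hourly_rate
    # Reserved capacity is paid for every hour, used or not, at a discount.
    reserved = HOURS_PER_MONTH * payg_hourly_rate * (1 - reserved_discount)
    return payg, reserved


def break_even_fraction(reserved_discount: float = 0.40) -> float:
    """Share of the month the capacity must run before reserving is cheaper."""
    return 1 - reserved_discount


for hours in (200, 600):
    payg, reserved = monthly_costs(hours, payg_hourly_rate=1.0)
    better = "reserved" if reserved < payg else "PAYG"
    print(f"{hours} h: PAYG {payg:.0f}, reserved {reserved:.0f}, cheaper: {better}")
```

With a 730-hour month the crossover is 0.6 × 730 = 438 running hours, which lines up with the >60% threshold in the checklist below.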

How to pick the right model and avoid common mistakes

1. Track your workload and consumption before committing

Because you’re buying a pool of compute rather than per-user seats, you need to know how many jobs, how many users, how many refreshes, how much concurrency you’ll have.

For example, if you pick a small capacity and your workloads go beyond it, Fabric may smooth the excess usage over time or, if the overage persists, throttle operations. So start with PAYG or a small capacity and track consumption for 60-90 days.
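During the tracking period, the useful numbers are average and peak utilisation against the capacity you are paying for. The sketch below assumes you have exported hourly CU-second totals (for example from the Fabric Capacity Metrics app; the export format here is hypothetical) and uses the fact that an F-SKU with N CUs provides N CU-seconds per second, i.e. N × 3,600 per hour.

```python
def utilisation_summary(hourly_cu_seconds: list[float], capacity_cus: int) -> dict[str, float]:
    """Average and peak utilisation, plus the share of hours above 100%."""
    if not hourly_cu_seconds:
        raise ValueError("no samples")
    available = capacity_cus * 3600  # CU-seconds an F-SKU provides per hour
    ratios = [used / available for used in hourly_cu_seconds]
    return {
        "average": sum(ratios) / len(ratios),
        "peak": max(ratios),
        "hours_over_capacity": sum(r > 1 for r in ratios) / len(ratios),
    }


# Hypothetical four hours of usage on an F4 (4 CUs = 14,400 CU-seconds per hour).
samples = [3600, 7200, 14400, 21600]
print(utilisation_summary(samples, capacity_cus=4))
```

Hours above 100% are not automatically failures, since smoothing spreads usage over time, but a persistent pattern of them suggests a larger SKU; a low average with rare peaks points towards a smaller SKU combined with scheduling.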

2. Ask the right questions up front

- “Will our usage spike at month-end or quarter-end?”

- “How many users are viewing dashboards vs authoring?”

- “How many data transformation jobs run concurrently?”

- “Can we pause capacity on nights/weekends or non-business hours?”

If you don’t answer these, you risk buying too much (wasted spend) or too little (performance issues).

3. Beware the “viewer licence” threshold

There’s a key capacity size (F64) where things change: for capacities below F64, users may still need separate Power BI Pro licences to consume reports or dashboards; at F64 and above, viewers may not need individual licences.

If you migrate from an older Power BI model into Fabric without checking this, you could pay more.
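The threshold can be turned into a rough licence estimate. This is a simplified sketch under two assumptions: authors always need a paid per-user licence (Pro or PPU), and viewers need one only when the content sits on a capacity smaller than F64. The function names are illustrative and edge cases such as external users are ignored, so check Microsoft’s current licensing terms for your scenario.

```python
def sku_size(sku: str) -> int:
    """Number of CUs in an F SKU name such as "F64"."""
    if not (sku.startswith("F") and sku[1:].isdigit()):
        raise ValueError(f"unexpected SKU name: {sku}")
    return int(sku[1:])


def pro_licences_needed(sku: str, authors: int, viewers: int) -> int:
    """Estimate paid per-user licences: authors always, viewers below F64."""
    if sku_size(sku) >= 64:
        return authors            # viewers may use free licences at F64+
    return authors + viewers      # below F64, viewers also need Pro


print(pro_licences_needed("F32", authors=5, viewers=200))   # 205
print(pro_licences_needed("F64", authors=5, viewers=200))   # 5
```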

4. Storage charges can sneak in

Large datasets, duplicates, backup snapshots, and deleted workspaces (retention periods) all consume storage. Storage costs may be modest compared to compute ($0.023 per GB/month as of November 2025), but at large volumes they add up.

Also, networking fees (data transfer) are “coming soon” as a Fabric cost item.

5. Don’t treat capacity like a fixed server

Because Fabric allows bursting (temporarily going above your base capacity) and smoothing (spreading load) your cost isn’t purely linear with peak loads. But you still pay for what you consume, so design workloads with efficiency in mind (incremental refresh, partitioning, avoiding waste).
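Smoothing is easier to picture with numbers. In the simplified model below, a background job’s CU-seconds are spread evenly across the following 24 hours. Fabric smooths background operations over 24 hours; interactive operations use much shorter windows, which this sketch ignores. The job size and capacity are hypothetical.

```python
def smooth_background_job(job_cu_seconds: float, start_hour: int,
                          horizon_hours: int = 48, window_hours: int = 24) -> list[float]:
    """Spread one job's CU-seconds evenly over `window_hours` from its start.

    Hours beyond `horizon_hours` are simply cut off in this simplified model.
    """
    load = [0.0] * horizon_hours
    share = job_cu_seconds / window_hours
    for hour in range(start_hour, min(start_hour + window_hours, horizon_hours)):
        load[hour] += share
    return load


capacity_per_hour = 8 * 3600    # F8: 28,800 CU-seconds per hour
job = 172_800                   # hypothetical nightly refresh
smoothed = smooth_background_job(job, start_hour=2)

print(f"unsmoothed: {job / capacity_per_hour:.0%} of one hour's capacity")
print(f"smoothed peak: {max(smoothed) / capacity_per_hour:.0%} per hour")
```

A job that would need six hours’ worth of an F8 in a single hour shows up as a 25% load on each of the next 24 hours. That is why a short spike need not force a bigger SKU, but the CU-seconds are still consumed and still count against the capacity.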

A simplified checklist for your team

- Audit actual workloads: data jobs, refreshes, user views.

- Choose initial capacity size: pick a modest SKU (F4, F8) for a pilot.

- For smaller solutions (below F64) it might make sense to combine Fabric PAYG with Power BI Pro licences to get the best balance of performance and price.

- Run PAYG for 60-90 days: monitor CUs used, storage, spikes.

- Analyse when you hit steady-state usage (>60% utilisation) → consider reserved capacity.

- Map user roles: who authors dashboards (needs Pro/PPU licence), who views only (maybe Free licence depending on capacity).

- Optimise data architecture: incremental loads, partitioning, reuse instead of duplication.

- Monitor monthly: CUs consumed, storage growth, unused capacity, aborted jobs.

- Align purchase: Scale up/down capacity ahead of known events (e.g., month-end), pause non-prod when idle.

Frequently-asked questions

“Will moving our Power BI setup into Fabric make our costs spiral?”

Not necessarily — if you migrate smartly. If you move without revisiting capacity size/user licences you could pay more, but if you use this as an opportunity to right-size, share compute across workloads, optimise refreshes and storage, you might actually get better value.

“Do I need a user licence for every viewer now?”

It depends on your capacity size. If your capacity is below certain thresholds, viewers may still need licences. At F64+ capacities you may allow free viewers for published dashboards. Monitor your scenario.

“What happens if we only run analytics at month-end? Can we scale down otherwise?”

Yes, with PAYG you can scale down or pause capacity when idle, hence paying only when needed. With reserved you lock in purchase. Choose based on your workload patterns.

“Is storage included in capacity cost?”

No, storage is separate. You’ll pay for OneLake storage and other persistent data. If you have massive data volumes, this needs budgeting.

Get Fabric licensing right from the start

While Microsoft Fabric’s licensing and pricing model may feel unfamiliar compared to traditional per-user or per-service models, it offers substantial flexibility and potential cost-efficiency if you approach it intentionally. Buy capacity, manage storage, plan user licences, and do the tracking in the early phase.

Teams that treat this as an afterthought often get surprised by bills or performance constraints. Teams that design for efficiency from the start get a shared analytics and data-engineering platform that truly scales.

Licensing clarity is the first step to a smooth Fabric journey.

Our experts can assess your current environment, model different licensing scenarios, and build a right-sized plan that keeps costs predictable as you scale.

TL;DR

Use our step-by-step guide to start using Fabric safely and cost-effectively, from setting up your first workspace and trial capacity to choosing the right entry path for your organisation. Build a lakehouse, connect data, create Delta tables, and expand gradually with pipelines, Dataflows, and Power BI. Whether you’re starting from scratch or integrating existing systems, it shows how to explore, validate, and adopt Fabric in a structured, low-risk way.

How to start using Fabric safely and cost-effectively

Before committing to a full rollout, start small. Activate a Fabric trial or set up a pay-as-you-go capacity at a low tier (F2–F8). These are cost-effective ways to explore real workloads and governance models without long-term commitments.

Begin by creating a workspace. From here, you can take one of two paths: starting fresh or integrating with what you already have.

1. Starting fresh (greenfield)

If you don’t yet have a mature data warehouse or analytics layer, Fabric lets you build the essentials quickly with minimal infrastructure overhead.

You can:

- Create a lakehouse in your workspace

- Import sample data (e.g. ready-to-use Contoso data) or upload Excel/CSV files

- Explore them with SQL or notebooks

This gives you a safe sandbox to understand how Fabric’s components interact and how data flows between them.

2. Integrating with what you already have

Most organisations already have data systems — SQL databases, BI tools, pipelines, or on-prem storage. Keep what works; Fabric can extend it.

You can:

- Use Dataflows Gen2 or pipelines to ingest and transform data from existing sources

- Create OneLake shortcuts to reference external storage

- Bring in exports or snapshots (for example, CRM tables or logs)

- Use Fabric as an analytical or orchestration layer on top of your current systems

This hybrid approach lets you test Fabric on real data without disrupting production systems, helping you identify where it delivers the most value before scaling further.

Next steps once you’re up and running

After choosing your entry path, expand iteratively. Fabric rewards structure, not speed.

Add ingestion and transformation

Continue shaping data with Notebooks, Dataflows Gen2 or pipelines, schedule refreshes, and test incremental updates to validate performance.
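Testing incremental updates usually means loading only the rows that changed since the last run, tracked with a watermark such as a last-modified timestamp. The sketch below shows that pattern in plain Python; the record shape, the `modified` field and the in-memory state are hypothetical. In Fabric you would persist the watermark (for example in a small table) and run the logic in a pipeline or notebook.

```python
from datetime import datetime

# Hypothetical record shape: {"id": ..., "modified": datetime, ...other columns}


def incremental_batch(source: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return the records modified after the watermark, plus the advanced watermark."""
    batch = [r for r in source if r["modified"] > watermark]
    new_watermark = max((r["modified"] for r in batch), default=watermark)
    return batch, new_watermark


def upsert(target: dict, batch: list[dict]) -> None:
    """Apply a batch keyed by id: insert new rows, overwrite changed ones."""
    for r in batch:
        target[r["id"]] = r


source = [
    {"id": 1, "status": "open", "modified": datetime(2024, 1, 1, 9)},
    {"id": 2, "status": "open", "modified": datetime(2024, 1, 1, 10)},
]
target: dict = {}
watermark = datetime.min

# First run: nothing loaded yet, so both rows are picked up.
batch, watermark = incremental_batch(source, watermark)
upsert(target, batch)

# Later, record 1 changes in the source system.
source.append({"id": 1, "status": "closed", "modified": datetime(2024, 1, 2, 8)})

# Second run: only the changed row is newer than the stored watermark.
batch, watermark = incremental_batch(source, watermark)
upsert(target, batch)
print(len(batch), target[1]["status"], watermark)
```

The strict `>` comparison assumes every change gets a later timestamp than the last load. If late-arriving rows can share a timestamp with the watermark, a common variation is to re-read a small overlap window and rely on the upsert being idempotent.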

Expose for analysis

Create a warehouse or semantic model, connect Power BI, and check performance, permissions, and security. Involve your Power BI administrators early — Fabric changes how capacities, roles, and governance interact.

Introduce real-time scenarios

Connect streaming sources, create real-time tables or queries, and trigger alerts or automated actions using activators.

Advance to AI and custom workloads

Train and score models in notebooks, or use the Extensibility Toolkit to build custom solutions integrated with pipelines.

Govern, monitor, and iterate

Apply governance policies, monitor cost and performance, and use CI/CD with Git integration to manage promotion across environments and maintain auditability.

Core Fabric building blocks and how to use them

Lakehouses & delta tables

Lakehouses in Fabric combine data lake flexibility with analytic consistency. Under the hood, Fabric stores tables in the Delta Lake format, which handles updates and changes reliably without breaking data consistency.

You can ingest raw files into lakehouse storage, define structured tables, and then query them with SQL or Spark notebooks. Use Delta features such as MERGE (upserts) and time travel (querying earlier table versions) to handle changes and versioning.
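Delta Lake records every write as a new table version in its transaction log, which is what makes upserts and time travel possible. The sketch below is a toy in-memory model of just those two behaviours; it copies the whole table on each commit, which Delta does not do (Delta keeps data in Parquet files plus a JSON transaction log), and the class and column names are hypothetical. In a Fabric notebook you would use Delta’s own `MERGE INTO` and `VERSION AS OF` syntax instead.

```python
import copy
from typing import Optional


class ToyVersionedTable:
    """Toy model: each commit stores a new snapshot, so older versions stay readable."""

    def __init__(self, key: str):
        self.key = key
        self._versions: list[dict] = [{}]  # version 0 is the empty table

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def merge(self, rows: list[dict]) -> int:
        """Upsert rows by key (update matches, insert the rest) and commit a new version."""
        snapshot = copy.deepcopy(self._versions[-1])
        for row in rows:
            snapshot[row[self.key]] = dict(row)
        self._versions.append(snapshot)
        return self.version

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Read the latest version, or an earlier one ("time travel")."""
        snapshot = self._versions[self.version if version is None else version]
        return [dict(snapshot[k]) for k in sorted(snapshot)]


table = ToyVersionedTable(key="customer_id")
table.merge([{"customer_id": 1, "tier": "basic"}, {"customer_id": 2, "tier": "premium"}])
table.merge([{"customer_id": 1, "tier": "premium"}, {"customer_id": 3, "tier": "basic"}])
print(table.version)                               # 2
print([r["tier"] for r in table.read()])           # ['premium', 'premium', 'basic']
print([r["tier"] for r in table.read(version=1)])  # ['basic', 'premium']
```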

Pipelines & Dataflows

Fabric includes pipeline orchestration similar to Azure Data Factory. Use pipelines, with their copy and transformation activities and scheduled runs, for heavier ETL/ELT workloads.

Use Dataflows Gen2 (Power Query–style) for lighter transformations or data-prep steps. Dataflows can also be run as a step within a pipeline.

If you prefer a code-first approach, you can use PySpark, Spark SQL or plain Python in a notebook to transform and analyse your data.

Together, they let you build end-to-end ingestion workflows, from source systems into lakehouses or warehouses.

Warehouses & SQL query layer

Once data is structured, you may want to provide a SQL query surface. Fabric lets you spin up analytical warehouses (relational, MPP) to serve reporting workloads.

These warehouses can sit atop the same data in your lakehouse, leveraging delta storage and ensuring you don’t duplicate data.

Real-time intelligence

One of Fabric’s differentiators is built-in support for streaming and event-based patterns. You can ingest event streams, process them, store them in real-time tables, run KQL queries, and combine them with historical datasets.

You can also define activators or automated rules to trigger actions based on data changes (e.g. alerts, downstream writes).
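Conceptually, such a rule watches a stream of values and fires when a condition starts to hold, rather than on every event where it holds, so a sustained problem produces one alert instead of hundreds. The sketch below illustrates that idea in plain Python; it is not Activator’s API, and the event shape, threshold and `notify` callback are hypothetical.

```python
from collections.abc import Callable, Iterable


def run_rule(
    events: Iterable[tuple[str, float]],
    threshold: float,
    notify: Callable[[str, float], None],
) -> None:
    """Call notify(object_id, value) when an object's value *becomes* greater than threshold."""
    above: dict[str, bool] = {}  # last known state per monitored object
    for object_id, value in events:
        is_above = value > threshold
        if is_above and not above.get(object_id, False):
            notify(object_id, value)  # fire only on the transition into the condition
        above[object_id] = is_above


# Hypothetical freezer temperature readings (degrees C); alert when warmer than -5.
readings = [
    ("freezer-1", -18.0), ("freezer-1", -4.0), ("freezer-1", -3.5),
    ("freezer-2", -17.0), ("freezer-1", -19.0), ("freezer-1", -2.0),
]
alerts: list[tuple[str, float]] = []
run_rule(readings, threshold=-5.0, notify=lambda oid, v: alerts.append((oid, v)))
print(alerts)  # [('freezer-1', -4.0), ('freezer-1', -2.0)]
```

The reading of -3.5 does not raise a second alert because freezer-1 was already above the threshold; the rule fires again only after the freezer recovers (-19.0) and then warms up again.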

Data science & AI

Fabric includes native support for notebooks, experiments (MLflow), model training, and scoring. You can read data from the lakehouse, run Python/Spark in notebooks, train models, register them, and score them at scale.

Because the same storage underlies all workloads, you don’t need to copy data between ETL, analytics, and AI layers.
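Whatever the tooling, the notebook workflow follows two steps: fit a model on historical lakehouse data, then apply it to new rows. The sketch below shows that train-then-score shape with ordinary least squares on a single feature, using only the standard library so it runs anywhere. In a Fabric notebook you would more likely use scikit-learn or Spark ML and track the run with MLflow; the feature, the data and the function names here are hypothetical.

```python
from statistics import mean


def train_ols(x: list[float], y: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares; return (slope, intercept)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must contain at least two distinct values")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def score(model: tuple[float, float], x_new: list[float]) -> list[float]:
    """Apply the trained model to new rows (batch scoring)."""
    slope, intercept = model
    return [slope * xi + intercept for xi in x_new]


# Hypothetical training data: promotions run per week vs. units sold.
promotions = [0, 1, 2, 3, 4]
units_sold = [100, 110, 120, 130, 140]
model = train_ols(promotions, units_sold)
print(model)                 # (10.0, 100.0)
print(score(model, [5, 6]))  # [150.0, 160.0]
```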

Extensibility & workloads

For development teams or ISVs, Fabric supports custom workload items, manifest definitions, and a DevGateway. Microsoft provides a Starter-Kit that helps you scaffold a "HelloWorld" workload to test in your environment.

You can fork the repository, run local dev environments, enable Fabric developer mode, and build custom apps or tools that operate within Fabric’s UI.

Common scenarios and example workflows

Speeding up Power BI reports

Move the logic of slow or complex dataflows into a lakehouse, define Delta tables, and connect Power BI directly to them for faster, incremental refreshes.

Real-time monitoring

Ingest IoT or application logs into real-time tables, run KQL queries to detect anomalies, and trigger automated alerts or actions as events occur.
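In Fabric you would typically write this detection as a KQL query over a real-time table, and KQL offers built-in time-series functions such as `series_decompose_anomalies()`. To show the underlying idea without assuming any particular query, the sketch below swaps in a deliberately simple technique, a rolling z-score: a reading is flagged when it lies more than `k` standard deviations from the mean of the previous `window` readings. The window size, `k` and the data are hypothetical.

```python
from collections import deque
from collections.abc import Iterable, Iterator
from statistics import mean, pstdev


def rolling_zscore_anomalies(
    values: Iterable[float], window: int = 5, k: float = 3.0
) -> Iterator[tuple[int, float]]:
    """Yield (index, value) for readings more than k std devs from the trailing-window mean."""
    history: deque = deque(maxlen=window)
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(v - mu) > k * sigma:
                yield i, v
        history.append(v)  # anomalies also enter the window in this simple version


# Hypothetical API latency readings in milliseconds.
latency_ms = [100, 102, 98, 101, 99, 100, 250, 101, 97]
print(list(rolling_zscore_anomalies(latency_ms)))  # [(6, 250)]
```

Because the spike stays in the window, the next few readings are judged against an inflated spread; production detectors usually handle this more carefully.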

Predictive analytics

Use lakehouse data to train and score models in notebooks, then surface results in Power BI for churn, demand, or risk forecasting — all within Fabric.

Custom extensions

Build domain-specific tools or visuals with the Extensibility Toolkit and integrate them directly into Fabric’s workspace experience.

Best practices and things to watch out for

Data discipline matters — naming, ownership, and refresh planning remain essential. Start small and build confidence. Begin with one or two use cases before expanding.
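Naming and ownership rules hold up best when they are checked automatically rather than from memory, for example as a step in your CI/CD process. The sketch below validates a table inventory against a hypothetical convention (lowercase snake_case with one of a few agreed prefixes) and flags tables without an owner. The prefixes, the inventory format and the owner names are assumptions to adapt to your own standards.

```python
import re

# Hypothetical convention: <prefix>_<name>, lowercase snake_case only.
ALLOWED_PREFIXES = ("raw", "stg", "dim", "fact")
NAME_PATTERN = re.compile(r"^(" + "|".join(ALLOWED_PREFIXES) + r")_[a-z0-9]+(_[a-z0-9]+)*$")


def check_inventory(tables: list[dict]) -> list[str]:
    """Return a readable problem description for each naming or ownership violation."""
    problems = []
    for table in tables:
        name = table.get("name", "")
        if not NAME_PATTERN.match(name):
            problems.append(f"{name!r}: name does not follow <prefix>_<snake_case>")
        if not table.get("owner"):
            problems.append(f"{name!r}: no owner assigned")
    return problems


# Hypothetical inventory of lakehouse tables and their owners.
inventory = [
    {"name": "fact_sales", "owner": "finance-team"},
    {"name": "Customers Final v2", "owner": "crm-team"},
    {"name": "dim_product", "owner": ""},
]
for problem in check_inventory(inventory):
    print(problem)
```

Failing a deployment whenever the returned list is non-empty is one way to keep the rules enforced as more teams start building in Fabric.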

Treat migration as iterative; don’t aim to move everything at once. Sync with your BI and governance teams early, as changes in permission and capacity models affect all users.

Use Microsoft’s “Get started with Microsoft Fabric” training, which walks you through each module step by step, and take advantage of the end-to-end tutorials covering ingestion, real-time, warehouse, and data science flows.

Fabric delivers the most value when aligned with your goals. Our team can help you plan, pilot, and scale it effectively — get in touch to get started.

This is the third part of our series on Microsoft Fabric. Read more about

- How Fabric changes enterprise data and AI projects

- When to start using Fabric, and

- The basics of Fabric licensing

Resources:

https://learn.microsoft.com/en-us/training/paths/get-started-fabric/

TL;DR

Fabric is emerging as the next-generation data and AI platform in Microsoft’s ecosystem. For growing organisations, the real question isn’t whether you’ll use it, but how to get ready so that the investment delivers value fast. Signs you’re ready to begin: your reporting relies on too many Excel files, your Power BI dashboards are slowing down, or pulling consistent data from different tools has become time-consuming and expensive. It’s also time to explore Fabric if your infrastructure and maintenance costs keep rising while insights stay stuck in silos.

“Where should we begin if we’re new to Microsoft Fabric?”

Whether you’re at the start of your analytics journey or already running tools like Synapse, Databricks, or Data Factory, Fabric offers a unified, scalable platform that connects your data and prepares you for AI. Not every organisation will start from the same place, and that’s okay.

Start small. Use a trial or low-tier capacity, connect one key dataset, define clear goals for your proof of concept (POC) and evaluate performance. Hold off only if your organisation lacks data discipline or governance maturity. The earlier you begin experimenting, the smoother your transition when Fabric becomes the foundation of enterprise data operations.

This is the second part of our series on Microsoft Fabric. Read more about

- How Fabric changes enterprise data and AI projects

- How to set up your first Fabric workspace, and

- The basics of Fabric licensing

“Can Fabric live up to its ‘all-in-one’ claims? Is now the right time to jump in?”

It’s a question many teams are asking as Microsoft pushes Fabric into mainstream adoption.

You’ve seen the demos, read the roadmap, and perhaps even clicked that ‘Try Fabric’ button — but readiness is key.

If you adopt it before your organisation is prepared, you’ll spend more time experimenting than gaining value. If you start using it too late, your competitors will already be using Fabric to run faster, cleaner, and more scalable data operations.

Why timing matters for Microsoft Fabric adoption

If your team already uses Microsoft 365, Power BI, or Azure SQL, Fabric is the natural next step. It brings all your data and analytics together in one secure, cloud-based platform, without adding another layer of complexity.

For many organisations, the real challenge isn’t collecting data — it’s connecting it. You might be pulling financials from SAP or Dynamics, customer data from an on-premises CRM, and operational data from a legacy ERP or manufacturing system. Each of those tools stores valuable information, but they rarely talk to each other in real time.

Fabric bridges that gap by creating a single, governed layer where all your data can live, be analysed, and feed AI models, while still integrating smoothly with your existing Microsoft environment. It brings together what used to be separate worlds:

- Synapse for warehousing,

- Data Factory for pipelines,

- Stream Analytics for real-time data, and

- Power BI for reporting.

Historically, these ran as siloed services with their own governance and performance quirks.

Fabric replaces this patchwork with one SaaS platform, powered by OneLake as a shared data foundation. That means one copy of data, one security model, and one operational playbook. Less time reconciling permissions, fewer brittle integrations, and a unified line of sight on performance.

For IT operations teams, this is a fundamental shift. Instead of maintaining scattered systems, they move towards proactive enablement, governance, monitoring, and automation.

The real challenge isn’t understanding what Fabric can do; it’s knowing when your environment and team are ready to make the move.

Questions like this one keep surfacing on forums such as Reddit:

“Do we actually have the right skills and governance in place to use Microsoft Fabric properly?”

Let’s see what readiness really looks like in practice.

Signs your organisation is ready for Microsoft Fabric

You don’t need a massive data team or an AI department to benefit from Fabric. What matters is recognising the early warning signs that your current setup is holding your business back.

You’re running the business from Excel (and everyone’s version is different)

If every department builds its own reports, and the CEO, finance, and sales teams are never looking at the same numbers, Fabric can unify your data sources so everyone works from a single source of truth.

Your Power BI reports are slowing down or getting hard to maintain

When dashboards take too long to refresh or adding new data breaks existing reports, you’ve outgrown your current setup. Fabric helps you scale Power BI while keeping performance and governance under control.

Reporting takes days and still raises more questions than answers

If your analysts spend more time moving files than analysing data, you’re ready for a platform that automates data movement and standardises reporting.

Your IT costs are rising, but you’re still not getting better insights

Maintaining servers, SQL databases, or patchwork integrations adds cost without adding value. Fabric replaces multiple systems with one managed platform, reducing overhead while enabling modern analytics and AI.

You want to use AI responsibly, but don’t know where to start

Fabric integrates Microsoft’s Copilot and data governance tools natively. That means you can explore AI safely — with your data, under your security policies — and our team can help you get there, step by step.

When to wait before adopting Microsoft Fabric

There are good reasons to hold off, too. If your organisation still lacks basic data discipline — no clear ownership, inconsistent naming, or ungoverned refreshes — Fabric will only amplify those gaps. Similarly, if you rely heavily on on-premises systems that can’t yet integrate with Fabric, the return on investment will be limited.

And if your team has no Fabric champions or time for upskilling, start with a pilot. Microsoft’s learning paths and community are growing by the day, but this is a platform that rewards patience and structure, not a rush to go all-in at once.

Even if you’re not ready today, you can start preparing by defining who owns your key datasets, documenting where your data lives, and appointing an internal analytics champion.

These are the first building blocks for a successful Fabric journey — and we can help you set them up before you invest in the platform itself.

Put real-time intelligence and AI agents to work

Once you’ve built a foundation of data discipline and readiness, Fabric starts to show its real power.

Fabric enables real-time visibility into your operations, whether that means tracking inventory levels, monitoring production metrics, or getting alerts when something in your system changes unexpectedly. Instead of waiting for a daily report, you can see what’s happening as it happens.

And AI capabilities go far beyond Copilot. Fabric introduces data agents: role-aware assistants that enable business users to explore and query data safely, without adding reporting overhead for IT. The result is true self-service intelligence, where operations teams can focus on governance, performance, and optimisation instead of ad-hoc report requests.

Start before you need it, but not before you’re ready

Teams that start experimenting with Fabric now will be the ones setting best practices later. Microsoft’s development pace is relentless, and Fabric’s roadmap is closing the gaps between data, analytics, and AI at speed.

Fabric adoption isn’t a switch-on event. It comes with a learning curve. The earlier you begin, the easier it becomes to standardise governance, establish naming conventions, and embed Fabric into CI/CD and monitoring pipelines.

If your analytics stack feels stretched, your infrastructure spend is rising, or your governance model needs a reset, it’s time to start testing Fabric. Do it deliberately, in small steps, and you’ll enter the next generation of Microsoft’s data platform on your own terms.

Ready to see how Microsoft Fabric could work for your organisation?

Whether you’re just exploring or planning a full modernisation, our team can help you map your current data landscape and guide you step-by-step through a safe, structured adoption.

Book a free Fabric readiness assessment.